May 23, 2025

(The code used is available at https://github.com/csdurfee/nfl_combine_data/.)

Intro

Every year, the National Football League hosts an event called the Combine, where teams can evaluate the top prospects before the upcoming draft.

Athletes are put through a series of physical and mental tests over the course of four days. There is a lot of talk of hand size, arm length, and whether a guy looks athletic enough when he's running with his shirt off. It's basically the world's most invasive job interview.

NFL teams have historically put a lot of stock in the results of the combine. A good showing at the combine can improve a player's career prospects, and a bad showing can significantly hurt them. For that reason, some players will opt out of attending the combine, but that can backfire as well.

I was curious about which events in the combine correlate most strongly with draft position. There are millions of dollars at stake. The first pick in the NFL draft gets a $43 million contract, the 33rd pick gets $9.6 million, and the 97th pick gets $4.6 million.

The main events of the combine are the 40 yard dash, vertical leap, bench press, broad jump, 3 cone drill and shuttle drill. The shuttle drill and the 3 cone drill are pretty similar -- a guy running between some cones as fast as possible. The other drills are what they sound like.

I'm taking the data from Pro Football Reference. Example page: https://www.pro-football-reference.com/draft/2010-combine.htm. I'm only looking at players who got drafted.

Position Profiles

It makes no sense to compare a cornerback's bench press numbers to a defensive lineman's. There are vast differences in the job requirements. A player in the combine is competing against other players at the same position.

The graph shows a position's performance on each exercise relative to all players. The color indicates how the position as a whole compares to the league as a whole. You can change the selected position with the dropdown.

Cornerbacks are exceptional on the 40 yard dash and shuttle drills compared to NFL athletes as a whole, whereas defensive linemen are outliers when it comes to high bench press numbers, and below average at every other event. Tight ends and linebackers are near the middle in every single event, which makes sense because both positions need to be strong enough to deal with the strong guys, and fast enough to deal with the fast guys.

Importance of Events by Position

I analyzed how a player's performance relative to others at their position correlates with draft rank. Pro-Football-Reference has combine data going back to 2000. I have split the data up into 2000-2014 and 2015-2025 to look at how things have changed.

For each position, the exercises are ranked from most to least important. The tooltip gives the exact r^2 value.
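A minimal sketch of that per-position calculation, assuming each drafted player is a record with a position, an event result, and an overall pick number (the field names and toy numbers below are hypothetical, not taken from the notebook). Each result is turned into a percentile among players at the same position, and r^2 is the squared Pearson correlation between that percentile and the pick. Squaring drops the sign, which would be negative here, since better results should mean earlier (smaller) picks.

```python
from collections import defaultdict

def percentile(x, values, lower_is_better=False):
    """Fraction of the other values that x beats (0 = worst, 1 = best)."""
    beaten = sum(1 for v in values if (v > x if lower_is_better else v < x))
    return beaten / (len(values) - 1)

def r_squared(xs, ys):
    """Squared Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov * cov / (vx * vy)

def r_squared_by_position(players, event, lower_is_better=False):
    """For each position, r^2 between within-position percentile and draft pick."""
    groups = defaultdict(list)
    for p in players:
        groups[p["position"]].append(p)
    result = {}
    for pos, group in groups.items():
        values = [p[event] for p in group]
        pct = [percentile(v, values, lower_is_better) for v in values]
        result[pos] = r_squared(pct, [p["pick"] for p in group])
    return result

# Hypothetical toy data: 40 yard dash (seconds, lower is better) and overall pick.
players = [
    {"position": "CB", "forty": 4.32, "pick": 12},
    {"position": "CB", "forty": 4.38, "pick": 60},
    {"position": "CB", "forty": 4.45, "pick": 40},
    {"position": "CB", "forty": 4.52, "pick": 150},
    {"position": "DT", "forty": 4.85, "pick": 90},
    {"position": "DT", "forty": 4.95, "pick": 15},
    {"position": "DT", "forty": 5.10, "pick": 120},
    {"position": "DT", "forty": 5.20, "pick": 30},
]
result = r_squared_by_position(players, "forty", lower_is_better=True)
print(result)
```

Ranking each exercise for a position is then just sorting that position's r^2 values across events.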

Here are the results up to 2014:

Here are the last 10 years:

Some things I notice:

The main combine events don't matter that much either way for offensive and defensive linemen. That's held true for 25 years.

The shuttle and 3 cone drill have gone up significantly in importance for tight ends.

Broad jump and 40 yard dash are important for just about every position. However, the importance of the 40 yard dash time has gone down quite a bit for running backs.

As a fan, it used to be a huge deal when a running back posted an exceptional 40 yard time. It seemed Chris Johnson's legendary 4.24 40 yard time was referenced every year. But I remember there being a lot of guys who got drafted in the 2000's primarily based on speed who turned out to not be very good.

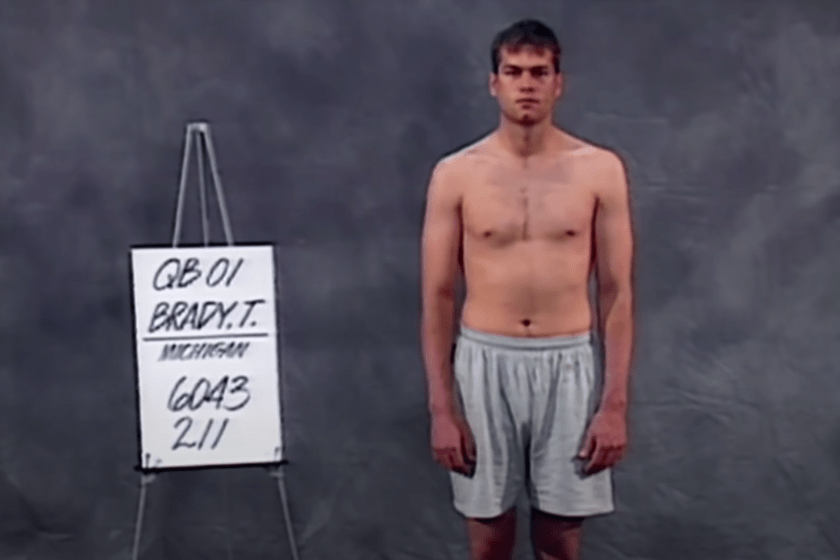

The bench press is probably the least important exercise across the board. There's almost no correlation between performance and draft order, for every position. Offensive and defensive linemen basically bench press each other for 60 minutes straight; for everybody else, that sort of strength is less relevant. Here's one of the greatest guys at throwing the football in human history, Tom Brady:

Compared to all quarterbacks who have been drafted since 2000, Brady's shuttle time was in the top 25%, his 3 cone time was in the top 50%, and his broad jump, vertical leap and 40 yard dash were all in the bottom 25%.

Changes in combine performance over time

Athlete performance has changed over time.

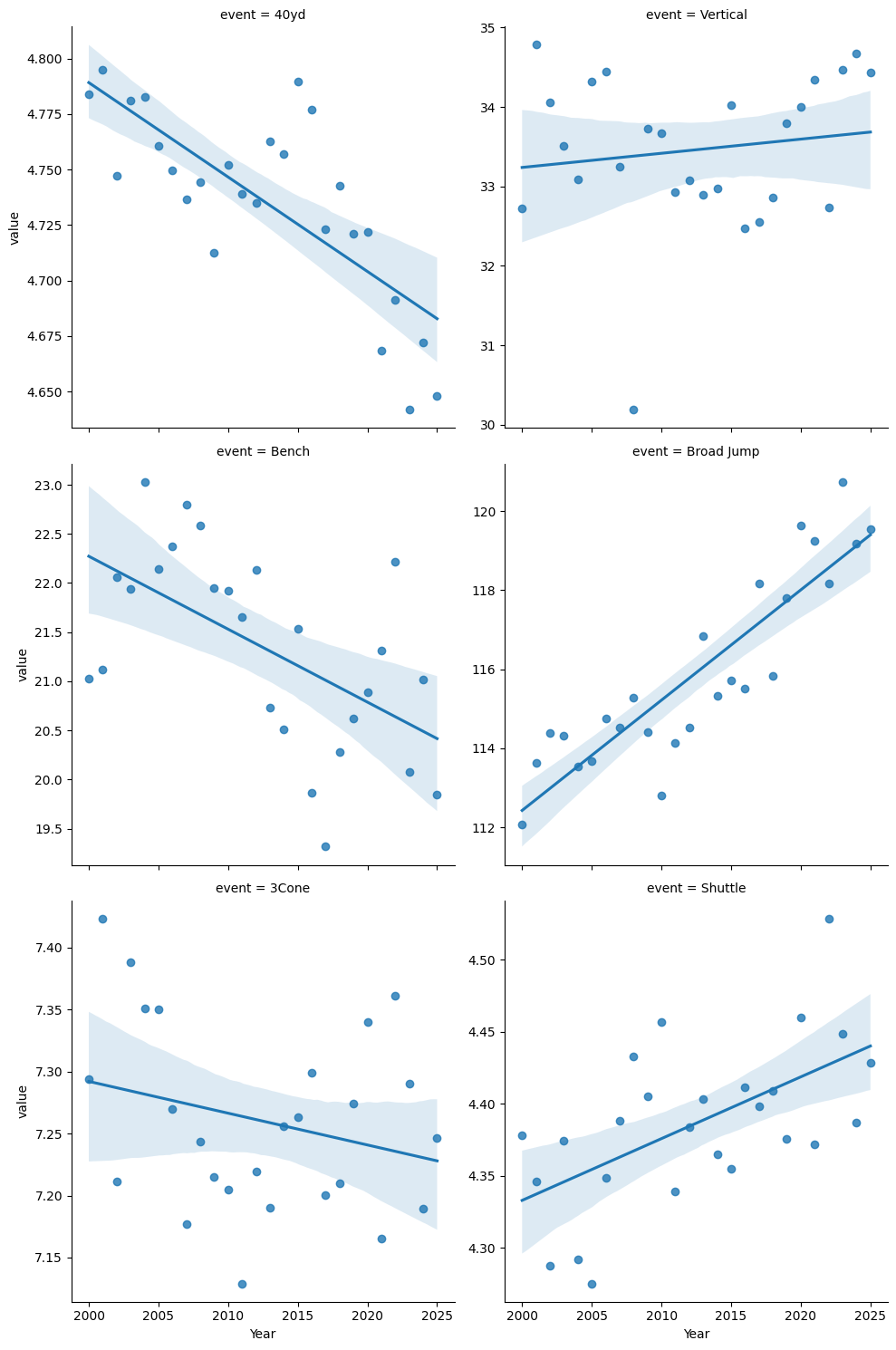

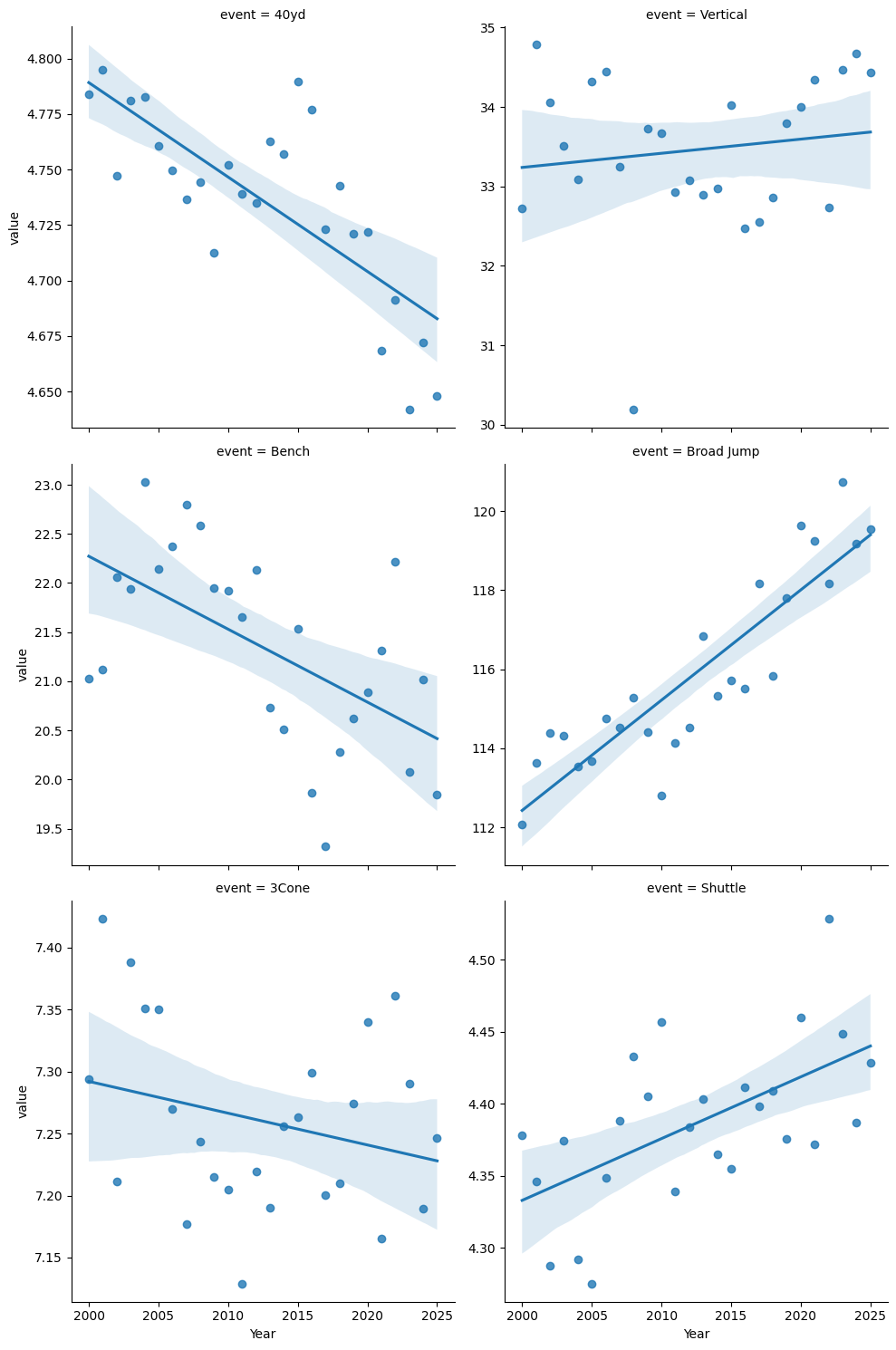

I've plotted average performance by year for each of the events. For the 40 yard dash, shuttle, and 3 cone drills, lower is better, and for the other events, higher is better.

40 yard dash times and broad jump distances have clearly improved, whereas shuttle times and bench press reps have gotten slightly worse.

There's a cliche in sports that "you can't coach speed". While some people are innately faster than others, the 40 yard dash is partly a skill exercise -- learning to get off the block as quickly as possible without faulting, for starters. The high priority given to the 40 yard dash should lead to prospects practicing it more, and thus getting better numbers.

The bench press should be going down or staying level, since it's not very important to draft position.

There's been a significant improvement in the broad jump - about 7.5% over 25 years. As with the 40 yard dash, I'd guess it's better coaching and preparation. Perhaps it's easier to improve than some of the other events. I don't think there's more broad jumping in an NFL game than there was 25 years ago.

Shuttle times getting slightly worse is a little surprising. The shuttle is very similar to the 3 cone drill, which has slightly improved. But as we saw, neither one is particularly important for draft position, and it's not a strong trend.

Caveats

Some of the best athletes skip the combine entirely, because their draft position is already secure. And some athletes will only choose to do the exercises they think they will do well at, and skip their weak events. This is known as MNAR data (missing not at random). Any analysis of MNAR data is potentially biased.
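A toy illustration of why MNAR data is a problem, with every number hypothetical: if athletes who expect a weak broad jump skip the event, the average of the jumps that actually get recorded overstates the average of the whole group.

```python
import random

random.seed(0)
# Hypothetical: 1,000 athletes with "true" broad jump distances in inches.
true_scores = [random.gauss(115, 8) for _ in range(1000)]

# Assumed skipping rule: anyone whose true jump is under 108 inches skips the event.
observed = [s for s in true_scores if s >= 108]

true_mean = sum(true_scores) / len(true_scores)
observed_mean = sum(observed) / len(observed)
print(f"true mean {true_mean:.1f}, observed mean {observed_mean:.1f}, "
      f"{len(true_scores) - len(observed)} athletes skipped")
```

Real skipping decisions are fuzzier than a hard cutoff, but any rule where weaker athletes are more likely to skip pushes the observed average in the same direction.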

I'm assuming a linear relationship between draft position and performance. It's possible that a good performance helps more than a bad performance hurts, or vice versa.

I didn't calculate statistical significance for anything. Some correlations will occur even in random data. This isn't meant to be rigorous.

May 22, 2025

(The notebook is available at github.com/csdurfee/ensemble_learning.)

Ensemble Learning

AI and machine learning systems are often used for classification. Is this email spam or not? Is this person a good credit risk or not? Is this a photo of a cat or not?

There are a lot of ways to build classifiers, and they all potentially have different strengths and weaknesses. It's natural to try combining multiple models together to produce better results than the individual models would.

Model A might be bad at classifying black cats but good at orange ones, model B might be bad at classifying orange cats but good at black ones, model C is OK at both. So if we average together the results of the three classifiers, or go with the majority opinion between them, the results might be better than the individual classifiers.

This is called an ensemble. Random forests and gradient boosting are two popular machine learning techniques that use ensembles of weak learners -- a large number of deliberately simple models. A random forest trains each tree on a different random subset of the data, while gradient boosting trains each new model to correct the errors of the ones before it. This strategy can lead to systems that are more powerful than their individual components. While each little tree in a random forest is weak and prone to overfitting, the forest as a whole can be robust and give high quality predictions.

Majority Voting

We can also create ensembles of strong learners -- combining multiple powerful models together. Each individual model is powerful enough to do the entire classification on its own, but we hope to achieve higher accuracy by combining their results. The most common way to do that is with voting. Query several classifiers, and have the ensemble return the majority pick, or otherwise combine the results.

There are some characteristics of ensembles that seem pretty common sense [1]. The classifiers in the ensemble need to be diverse: as different as possible in the mistakes they make. If they all make the same mistakes, then there's no way for the ensemble to correct for that.

The more classification categories, the more classifiers are needed in the ensemble. However, in real world settings, there's usually a point where adding more classifiers doesn't improve the ensemble.

The Model

I like building really simple models. They can illustrate fundamental characteristics, and show what happens at the extremes.

So I created an extremely simple model of majority voting (see notebook). I'm generating a random list of 0's and 1's, indicating the ground truth of some binary classification problem. Then I make several copies of the ground truth and randomly flip x% of the bits. Each of those copies represents the responses from an individual classifier within the ensemble. Each fake classifier will have (100 - x)% accuracy. There's no correlation between the wrong answers that each classifier gives, because the changes were totally random.

For every pair of fake classifiers with 60% accuracy, they will both be right 60% * 60% = 36% of the time, and both wrong 40% * 40% = 16% of the time. So they will agree 36% + 16% = 52% of the time on average.
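A minimal sketch of that setup, assuming 10,000 balanced binary labels and three classifiers at 60% accuracy (the function names are mine, not the notebook's). Each fake classifier flips exactly 40% of the bits at random, and the ensemble takes a hard majority vote.

```python
import random

def make_fake_classifier(truth, accuracy, rng):
    """Copy the ground truth and flip a random (1 - accuracy) fraction of the bits."""
    n = len(truth)
    flipped = set(rng.sample(range(n), round(n * (1 - accuracy))))
    return [1 - y if i in flipped else y for i, y in enumerate(truth)]

def match_rate(a, b):
    """Fraction of positions where two 0/1 lists agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def hard_vote(predictions):
    """Majority vote per item across an odd number of classifiers."""
    return [1 if sum(votes) * 2 > len(votes) else 0 for votes in zip(*predictions)]

rng = random.Random(42)
truth = [rng.randint(0, 1) for _ in range(10_000)]
clfs = [make_fake_classifier(truth, 0.60, rng) for _ in range(3)]

individual = match_rate(clfs[0], truth)   # 0.6 by construction
agreement = match_rate(clfs[0], clfs[1])  # expected to be about 0.52
ensemble = match_rate(hard_vote(clfs), truth)
print(f"individual {individual:.3f}, agreement {agreement:.3f}, ensemble {ensemble:.3f}")
```

Pairwise agreement should land near the 52% computed above, and the three-way vote should beat the individual 60%.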

That's different from the real world. Machine learning algorithms trained on the same data will make a lot of the same mistakes and get a lot of the same questions right. If there are outliers in the data, any classifier can overfit on them. And they're all going to find the same basic trends in the data. If there aren't a lot of good walrus pictures in the training data, every model is probably going to be bad at recognizing walruses. There's no way to make up for what isn't there.

Theory vs Reality

In the real world, there seem to be limits on how much an ensemble can improve classification. On paper, there are none, as the simulation shows.

What is the probability of the ensemble being wrong about a particular classification?

That's the probability that the majority of the classifiers predict 0, given that the true value is 1 (and vice versa). If each classifier is more likely to be right than wrong, as the number of classifiers goes to infinity, the probability of the majority of predictions being wrong goes to 0.

If each binary classifier has a probability > .5 of being right, we can make the ensemble arbitrarily accurate if we add enough classifiers to the ensemble (assuming their errors are independent). We could grind the math using the normal approximation to get the exact number if need be.

Let's say each classifier is only right 50.5% of the time. We might have to add 100,000 of them to the ensemble, but we can make the error rate arbitrarily small.
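A minimal sketch of that calculation, assuming n independent classifiers (n odd) that are each right with probability p. `majority_error_exact` sums the binomial tail in log space (via `math.lgamma`) so it doesn't overflow at n = 100,001, and `majority_error_normal` is the normal approximation mentioned above, with a continuity correction. With p = 0.505, the error rate at n = 100,001 comes out below 0.1%.

```python
import math

def majority_error_exact(n, p):
    """P(majority of n independent voters is wrong), each right with prob p; n odd.
    Each binomial term is computed in log space to avoid overflow for large n."""
    total = 0.0
    for k in range((n + 1) // 2, n + 1):  # k = number of wrong votes
        log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * math.log(1 - p) + (n - k) * math.log(p))
        total += math.exp(log_term)
    return total

def majority_error_normal(n, p):
    """Normal approximation (with continuity correction) to the same probability."""
    mean = n * (1 - p)                    # expected number of wrong votes
    sd = math.sqrt(n * p * (1 - p))
    z = ((n + 1) / 2 - 0.5 - mean) / sd   # majority wrong means >= (n+1)/2 wrong votes
    return 0.5 * math.erfc(z / math.sqrt(2))

for n in (1, 101, 10_001, 100_001):
    print(n, majority_error_exact(n, 0.505), majority_error_normal(n, 0.505))
```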

Correlated errors ruin ensembles

The big difference between my experiment and reality is that the errors the fake classifiers make are totally uncorrelated with each other. I don't think that would ever happen in the real world.

The more the classifiers' wrong answers are correlated with each other, the less useful the ensemble becomes. If they are 100% correlated with each other, the ensemble will give the exact same results as the individual classifiers, right? An ensemble doesn't have to improve results.

To put it in human terms, the "wisdom of the crowd" comes from people in the crowd having wrong beliefs about uncorrelated things (and being right more often than not overall). If most people are wrong in the same way, there's no way to overcome that with volume.

My experience has been that different models tend to make the same mistakes, even if they're using very different AI/machine learning algorithms, and a lot of that is driven by weaknesses in the training data used.

For a more realistic scenario, I created fake classifiers with correlated answers, so that they agree with the ground truth 60% of the time, and with each other 82% of the time, instead of the 52% we'd expect if their errors were independent.

The Cohen kappa score is .64, on a scale from -1 to 1, so they aren't as correlated as they could be.
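Where the .64 comes from: Cohen's kappa compares the observed agreement to the agreement expected by chance, given each classifier's rate of predicting 1. If both classifiers predict 1 about half the time, chance agreement is .5, so 82% agreement gives (.82 - .5) / (1 - .5) = .64. A small sketch with a made-up pair of prediction lists (scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic):

```python
def cohen_kappa(a, b):
    """Cohen's kappa for two binary prediction lists of equal length."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n           # each classifier's rate of predicting 1
    expected = pa * pb + (1 - pa) * (1 - pb)  # agreement expected by chance alone
    return (observed - expected) / (1 - expected)

# Two hypothetical classifiers that each predict 1 half the time and agree on 82 of 100 items.
a = [1] * 50 + [0] * 50
b = [1] * 41 + [0] * 9 + [1] * 9 + [0] * 41
print(round(cohen_kappa(a, b), 2))  # 0.64
```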

The simulation shows that if the responses are fairly strongly correlated with each other, there's a hard limit to how much the ensemble can improve things.

Even with 99 classifiers in the ensemble, the simulation only achieves an f1 score of .62. That's just a slight bump from the .60 achieved individually. There is no marginal value to adding more than 5 classifiers to the ensemble at this level of correlation.
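One hypothetical way to generate correlated classifiers (not necessarily how the notebook does it) is an "item difficulty" model: each item is easy or hard, and every classifier's chance of being right depends on that shared difficulty. The parameters `easy_frac`, `p_easy` and `p_hard` are my own choices, picked so each classifier is about 60% accurate and pairs agree about 82% of the time. In this model, piling on voters can at best get every easy item right and every hard item wrong, so ensemble accuracy stalls near `easy_frac` however many classifiers are added.

```python
import random

def simulate(n_items, n_classifiers, rng, easy_frac=0.62, p_easy=0.905, p_hard=0.102):
    """Hypothetical correlated-error model: each item is 'easy' or 'hard', and every
    classifier's chance of being right depends on that shared difficulty."""
    truth = [rng.randint(0, 1) for _ in range(n_items)]
    easy = [rng.random() < easy_frac for _ in range(n_items)]
    preds = [
        [y if rng.random() < (p_easy if e else p_hard) else 1 - y
         for y, e in zip(truth, easy)]
        for _ in range(n_classifiers)
    ]
    return truth, preds

def agreement_rate(a, b):
    """Fraction of positions where two lists match (accuracy if b is the truth)."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def hard_vote(preds):
    """Majority vote per item across an odd number of classifiers."""
    return [1 if sum(votes) * 2 > len(votes) else 0 for votes in zip(*preds)]

rng = random.Random(0)
truth, preds = simulate(10_000, 99, rng)
acc_individual = agreement_rate(preds[0], truth)
pairwise = agreement_rate(preds[0], preds[1])
ensemble_acc = {k: agreement_rate(hard_vote(preds[:k]), truth) for k in (1, 3, 5, 25, 99)}
print(f"individual accuracy {acc_individual:.3f}, pairwise agreement {pairwise:.3f}")
for k, acc in ensemble_acc.items():
    print(f"{k:>2} classifiers: {acc:.3f}")
```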

Ensembles: The Rich Get Richer

I've seen voting ensembles suggested for especially tricky classification problems, where the accuracy of even the best models is pretty low. I haven't found that to be true, though, and the simulation backs that up. Ensembles are only going to give a significant boost for binary classification if the individual classifiers are significantly better than 50% accuracy.

The more accurate the individual classifiers, the bigger the boost from the ensemble. These numbers are for an ensemble of 3 classifiers (in the ideal case of no correlation between their responses):

| Classifier Accuracy | Ensemble Accuracy |
|---|---|
| 55% | 57% |
| 60% | 65% |
| 70% | 78% |
| 80% | 90% |
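With no correlation between classifiers, the 3-classifier numbers can be computed exactly: the majority is right when all three are right or exactly two are. A quick check, assuming independent classifiers that share the same accuracy p:

```python
def ensemble_accuracy_3(p):
    """Hard-vote accuracy of 3 independent classifiers, each right with probability p:
    all three right, or exactly two right (3 ways to pick the wrong one)."""
    return p**3 + 3 * p**2 * (1 - p)

for p in (0.55, 0.60, 0.70, 0.80):
    print(f"{p:.0%} -> {ensemble_accuracy_3(p):.1%}")
```

That gives about 57.5%, 64.8%, 78.4% and 89.6%, which round to the table's values.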

Hard vs. soft voting

There are two different ways of doing majority voting, hard and soft. This choice can have an impact on how well an ensemble works, but I haven't seen a lot of guidance on when to use each.

Hard voting is where we convert the outputs of each binary classifier into a boolean yes/no, and go with the majority opinion. If there is an odd number of components and it's a binary classification, there's always going to be a clear winner. That's what I've been simulating so far.

Soft voting is where we combine the raw outputs of all the components, and then round the combined result to make the prediction. sklearn's documentation advises to use soft voting "for an ensemble of well-calibrated classifiers".

In the real world, binary classifiers don't return a nice, neat 0 or 1 value. They return some value between 0 and 1 indicating a relative level of confidence in the prediction, and we round that value to 0 or 1. A lot of models will never return a 0 or 1 -- for them, nothing is impossible, just extremely unlikely.

If a classifier returns .2, we can think of it as the model giving a 20% chance that the answer is 1 and an 80% chance it's 0. That's not really true, but the big idea is that there's potentially additional context that we're throwing away by rounding the individual results.

For instance, say the raw results are [.3,.4,.9]. With hard voting, these would get rounded to [0,0,1], so it would return 0. With soft voting, it would take the average of [.3,.4,.9], which is .53, which rounds to 1. So the two methods can return different answers.

To emulate the soft voting case, I flipped a percentage of the bits, as before. Then I replaced every 0 with a number chosen randomly from the uniform distribution from [0,.5] and every 1 with a sample from [.5,1]. The values will still round to what they did before, but there's additional noise on top.
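A minimal sketch of both voting rules and of that noise scheme, assuming three classifiers that are each independently right with probability p (a simplification of flipping an exact percentage of bits). Under this uniform noise there's also a closed form: when two of three classifiers are right, the average lands on the correct side 5/6 of the time, and when only one is right, 1/6 of the time. That works out to about 56%, 62%, 74% and 85% for the accuracies in the table that follows.

```python
import random

def hard_vote(scores):
    """Round each classifier's score to 0/1 first, then take the majority."""
    votes = [1 if s > 0.5 else 0 for s in scores]
    return 1 if sum(votes) * 2 > len(votes) else 0

def soft_vote(scores):
    """Average the raw scores first, then round the average."""
    return 1 if sum(scores) / len(scores) > 0.5 else 0

print(hard_vote([0.3, 0.4, 0.9]), soft_vote([0.3, 0.4, 0.9]))  # 0 1

def fuzz(bit, rng):
    """Replace a 0 with a uniform draw from [0, .5] and a 1 with one from [.5, 1]."""
    return rng.uniform(0.0, 0.5) if bit == 0 else rng.uniform(0.5, 1.0)

def compare(p, n_items=50_000, n_classifiers=3, seed=0):
    """Simulated (hard, soft) ensemble accuracy when each classifier is right w.p. p."""
    rng = random.Random(seed)
    hard_right = soft_right = 0
    for _ in range(n_items):
        y = rng.randint(0, 1)
        # Simplification: each classifier is independently right with probability p.
        bits = [y if rng.random() < p else 1 - y for _ in range(n_classifiers)]
        scores = [fuzz(b, rng) for b in bits]
        hard_right += hard_vote(scores) == y
        soft_right += soft_vote(scores) == y
    return hard_right / n_items, soft_right / n_items

def soft_accuracy_3(p):
    """Closed-form soft-vote accuracy for 3 classifiers under this uniform noise."""
    q = 1 - p
    return p**3 + 3 * p**2 * q * 5 / 6 + 3 * p * q**2 / 6

hard, soft = compare(0.8)
print(f"hard {hard:.3f}, soft {soft:.3f}, soft closed form {soft_accuracy_3(0.8):.3f}")
```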

In this simulation (3 classifiers), the soft voting ensemble gives less of a boost than the hard voting ensemble -- about half the benefits. As with hard voting, the more accurate the individual classifiers are, the bigger the boost the ensemble gives.

| Classifier Accuracy | Ensemble Accuracy |
|---|---|
| 55% | 56% |
| 60% | 62% |
| 70% | 74% |
| 80% | 85% |

Discussion

Let's say a classifier returns .21573 and I round that down to 0. How much of the .21573 that got lost was noise, and how much was signal? If a classification task is truly binary, it could be all noise. Let's say we're classifying numbers as odd or even. Those are unambiguous categories, so a perfect classifier should always return exactly 0 or 1. It shouldn't say that three is odd, with 90% confidence. In that case, it clearly means the classifier is 10% wrong. There's no good reason for uncertainty.

On the other hand, say we're classifying whether photos contain a cat or not. What if a cat is wearing a walrus costume in one of the photos? Shouldn't the classifier return a value greater than 0 for the possibility of it not being a cat, even if there really is a cat in the photo? Isn't it somehow less cat-like than another photo where it's not wearing a walrus costume? In this case, the .21573 at least partially represents signal, doesn't it? It's saying "this is pretty cat-like, but not as cat-like as another photo that scored .0001".

When I'm adding noise to emulate the soft voting case, is that fair? A different way of fuzzing the numbers (selecting the noise from a non-uniform distribution, for instance) might reduce the gap in performance between hard and soft voting ensembles, and it would probably be more realistic. But the point of a model like this is to show the extremes -- it's possible that hard voting will give better results than soft voting, so it's worth testing.

Big Takeaways

- Ensembles aren't magic; they can only improve things significantly if the underlying classifiers are diverse and fairly accurate.

- Hard and soft voting aren't interchangeable. If there's a lot of random noise in the responses, hard voting is probably a better option, otherwise soft voting is probably better. It's definitely worth testing both options when building an ensemble.

- Anyone thinking of using an ensemble should look at the amount of correlation between the responses from different classifiers. If the classifiers are all making basically the same mistakes, an ensemble won't help regardless of hard vs. soft voting. If models with very different architectures are failing in the same ways, that could be a weakness in the training data that can't be fixed by an ensemble.

References

[1] Bonab, H., & Can, F. (2017). Less is more: A comprehensive framework for the number of components of ensemble classifiers. arXiv:1709.02925.

Tsymbal, A., Pechenizkiy, M., & Cunningham, P. (2005). Diversity in search strategies for ensemble feature selection. Information Fusion, 6(1), 83–98. doi:10.1016/j.inffus.2004.04.003

May 16, 2025

(The code used, and IPython notebooks with a fuller investigation of the data, are available at https://github.com/csdurfee/hot_hand.)

Streaks

When I'm watching a basketball game, sometimes it seems like a certain player just can't miss. Every shot looks like it's going to go in. Other times, it seems like they've gone cold. They can't get a shot to go in no matter what they do.

This phenomenon is known as the "hot hand" and whether it exists or not has been debated for decades, even as it's taken for granted in the common language around sports. We're used to commentators saying that a player is "heating up", or, "that was a heat check".

As a fan of the game, it certainly seems like the hot hand exists. If you follow basketball, some names probably come to mind. JR Smith, Danny Green, Dion Waiters, Jamal Crawford. When they're on, they just can't miss. It doesn't matter how crazy the shot is, it's going in. And when they're cold, they're cold.

It's a thing we collectively believe in, but it turns out that there isn't clear statistical evidence to support it.

We have to be careful with our feelings about the hot hand. It certainly feels real, but that doesn't mean that it is. Within the drama of a basketball game, we're inclined to notice and assign stories to runs of makes or misses. Just because we notice them, that doesn't mean they're significant. This is sometimes called "the law of small numbers" -- our brains have a tendency to reach spurious conclusions from a very small amount of data.

Pareidolia is the human tendency to see human faces in inanimate objects -- clouds, the bark of a tree, a tortilla. While the faces might seem real, they are just a product of our brain's natural inclination to identify patterns. It's possible the "hot hand" is a similar phenomenon -- a product of the way human brains are wired to see patterns, rather than an objective truth.

Defining Streakiness

Streaks of 1's and 0's in randomly generated binary data follow regular mathematical laws, ones our brains can't really replicate. Writer Joseph Buchdahl found that he couldn't create a random-looking sequence by hand that would fool a statistical test called the Wald-Wolfowitz test, even though he knew exactly how the statistical test worked.

I think at some level, we're physically incapable of generating truly random data, so it makes sense to me that our intuitions about randomness are a little off. Our brains are wired to notice the streaks, but we seem to have no such circuitry for noticing when something is a little bit too un-streaky. Our brains are too quick to see meaningless patterns in small amounts of data, and not clever enough to see subtle, meaningful patterns in large amounts of data. Good thing we have statistics to help us escape those biases!

For the sake of this discussion, a streak starts whenever a sequence of outcomes changes from wins (W) to losses (L), or vice-versa. (I'm talking about makes and misses, but those start with the same letter, so I'll use "W" and "L".)

The sequence WLWLWL has 6 streaks: W, L, W, L, W, L

The sequence WWLLLW has 3 streaks: WW, LLL, W

Imagine I asked someone to produce a random-looking string of 3 W's and 3 L's. If they were making the results up, I think the average person would be more likely to write the first string. It just looks "more random", right?

If they flipped a coin, it would be more likely to produce something with longer streaks, like the second example. With a fair coin, both of those exact sequences are equally likely to occur. But the second sequence has a more probable number of streaks, according to the Wald-Wolfowitz Runs Test. The expected number in 3 wins and 3 losses is (2 * (3 * 3) / (3+3)) + 1 = 4.

The expected number of streaks is the harmonic mean of the number of wins and the number of losses, plus one. Neat, right?
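A small sketch of the streak counting and the expected-streak formula, using Python's `itertools.groupby` to split a sequence into runs (the function names are my own):

```python
from itertools import groupby

def count_streaks(seq):
    """Number of runs of identical outcomes, e.g. 'WWLLLW' -> 3."""
    return sum(1 for _ in groupby(seq))

def expected_streaks(wins, losses):
    """Wald-Wolfowitz expected number of runs: 2*W*L/(W+L) + 1.
    The first term is the harmonic mean of W and L."""
    return 2 * wins * losses / (wins + losses) + 1

print(count_streaks("WLWLWL"), count_streaks("WWLLLW"))  # 6 3
print(expected_streaks(3, 3))                            # 4.0
```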

Around 500 players attempted a shot in the NBA this season. Let's say we create a custom coin for each player. It comes up heads with the same percentage as the player's shooting percentage on the season. If we took those coins and simulated every shot in the NBA this season, some of the coins would inevitably appear to be "streakier" than others.

Players never intend to miss shots, yet most players shoot around 50%, so there has to be some element of chance as far as which shots go in or not. Otherwise, why wouldn't players just choose to make all of them?

So makes versus misses are at least somewhat random, which means if we look at the shooting records of 500 players in an NBA season, some will seem more or less consistent due to the laws of probability. That means a player with longer or shorter streaks than expected could just be due to chance, not due to the player actively doing something that makes them more streaky.

The Lukewarm Hand

We might call players who have fewer streaks than expected by chance consistent. Maybe they go exactly 5 for 10 every single game, never being especially good or especially bad. Or maybe they go 1 for 3 every game, always being pretty bad.

But that feels like the wrong word, and I don't think our brains are really wired to notice a player that has fewer streaks than average. As we already saw, the "right" number of streaks is counterintuitive.

I might notice a player is unusually consistent after the fact when looking at their basketball-reference page, but the feeling of a player having the hot hand is visceral, experienced in the moment. Even without consulting the box score, sometimes players look like they just can't miss, or can't make, a shot. They seem more confident, or their shot seems more natural, than usual. Both the shooter and the spectator seem to have a higher expectation that the shot will go in than usual. The hot hand is a social phenomenon.

(from https://xkcd.com/904/)

If we look at the makes and misses of every player in the league, do they look like the results of flipping a coin (weighted to match their shooting percentage), or is there a tendency for players to be more or less streaky than expected by chance?

We don't really have a formal word for players who are less streaky than they should be, so I'm going to call the opposite of the hot hand the lukewarm hand. While the lukewarm hand isn't a thing we would viscerally notice the way we do the hot hand, it could certainly exist. And it's just as surprising, from the perspective of treating basketball players like weighted coins.

Some people I've seen analyze the hot hand treat the question as streaky versus non-streaky. But it's not a binary thing. There are two possible extremes, and a region in between: unusually streaky, a normal amount of streaky, and unusually non-streaky.

The Wald-Wolfowitz test says that the number of streaks in randomly generated data will be approximately normally distributed (for reasonably long sequences), and gives a formula for the variance of the number of streaks. The normal distribution is symmetrical, so there should be as many hot hand players as lukewarm hand ones. Players take different numbers of shots over the course of the season, so we can't compare their streak counts directly, but we can calculate a z score from each player's expected vs. actual number of streaks. The z score represents how "weird" the player is. If we look at all the z-scores together, we can see whether NBA players as a whole are streakier or less streaky than chance alone would predict. We can also see if the outliers correspond to the popular notions of who the streaky shooters in the NBA are.
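A sketch of the per-player z-score, using the standard Wald-Wolfowitz mean 2WL/(W+L) + 1 and variance 2WL(2WL - W - L) / ((W+L)^2 (W+L-1)), with makes coded as 1 and misses as 0. The "league" below is hypothetical: 500 weighted coins with made-up shooting percentages and shot counts, standing in for the coin thought experiment above. If players really were weighted coins, their z-scores should look roughly like a standard normal, centered at 0 with a standard deviation near 1.

```python
import math
import random
from itertools import groupby

def runs_z_score(seq):
    """Wald-Wolfowitz runs test z-score for a sequence of 1 (make) / 0 (miss).
    Positive = more streaks than expected (less streaky); negative = streakier."""
    n1 = sum(seq)          # makes
    n2 = len(seq) - n1     # misses
    n = n1 + n2
    runs = sum(1 for _ in groupby(seq))
    mean = 2 * n1 * n2 / n + 1
    var = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n * n * (n - 1))
    return (runs - mean) / math.sqrt(var)

# Hypothetical league of weighted coins: made-up shooting percentages and shot counts.
rng = random.Random(1)
zs = []
for _ in range(500):
    pct = rng.uniform(0.40, 0.60)
    shots = rng.randint(200, 1200)
    seq = [1 if rng.random() < pct else 0 for _ in range(shots)]
    zs.append(runs_z_score(seq))

mean_z = sum(zs) / len(zs)
sd_z = math.sqrt(sum((z - mean_z) ** 2 for z in zs) / (len(zs) - 1))
print(f"mean z {mean_z:.3f}, sd {sd_z:.3f}, min {min(zs):.2f}, max {max(zs):.2f}")
```

Even in this purely random league, a handful of coins will land beyond plus or minus 2, which is the point about some coins inevitably looking streakier than others.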

Simplifying Assumptions

We should start with the assumption that athletes really are weighted random number generators. A coin might have "good days" and "bad days" based on the results, but it's not because the coin is "in the zone" one day, or a little injured the next day. At least some of the variance in a player's streakiness is due to randomness, so we have to be looking for effects that can't be explained by randomness alone.

So I am analyzing all shots a player took, across all games. This could cause problems, which I will discuss later on, but splitting the results up game-by-game or week-by-week leads to other problems. Looking at shooting percentages by game or by week means smaller sample sizes, and thus more sampling error. It also means that comparisons between high volume shooters and low volume shooters can be misinterpreted. The high volume shooters may appear more "consistent" simply because of their larger sample sizes.

I think I need to prove that streakiness exists before making assumptions about how it works. Let's say the "hot hand" does exist. If a player makes a bunch of shots in a row, how long might they stay hot? Does it last through halftime? Does it carry over to the next game? How many makes in a row before they "heat up"? How much does a player's field goal percentage go up? Does a player have cold streaks and hot streaks, or are they only streaky in one direction?

There are an infinite number of ways to model how it could work, which means it's ripe for overfitting. So I wanted to start with the simplest, most easily justifiable model. The original paper about the hot hand (Gilovich, Vallone and Tversky, 1985) was co-written by Amos Tversky, who laid the groundwork for behavioral economics with Daniel Kahneman. Kahneman won a Nobel Prize for that work in 2002; Tversky died in 1996, and the prize isn't awarded posthumously. I figure any time you can crib off of a Nobel-caliber researcher's homework, you probably should!

Results

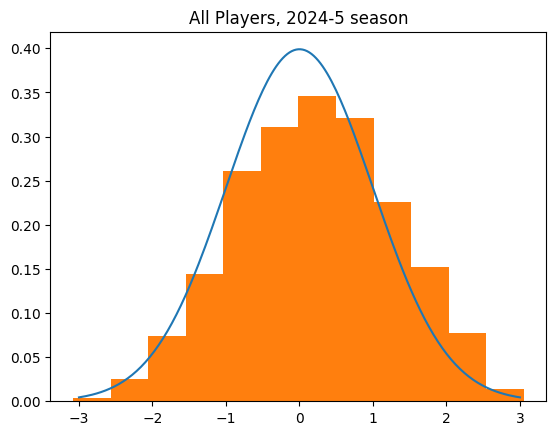

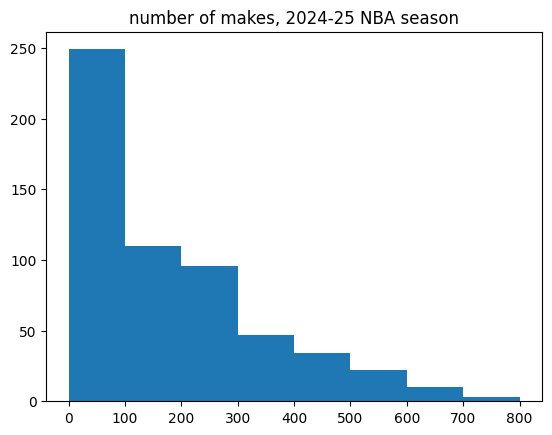

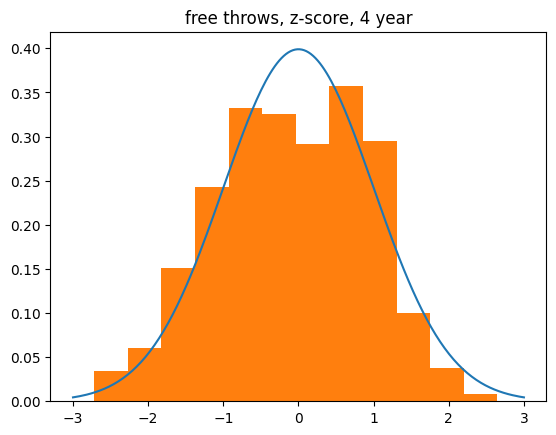

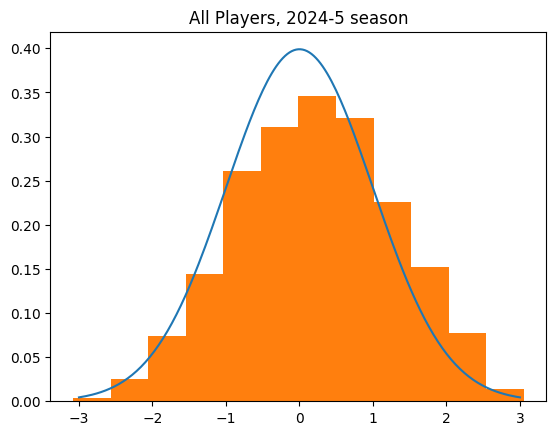

I started off by getting data on every shot taken in the 2024-25 NBA regular season. I calculated the expected number of streaks and actual number, then a z-score for every player.

Players with a z-score of 0 are just like what we'd expect from flipping a coin. A positive z-score indicates there were more streaks than expected. More streaks than expected means the streaks were shorter than expected, which means less streaky than expected.

A negative z-score indicates the opposite. Those players had fewer streaks than expected, which means the streaks were longer. When people talk about the "hot hand" or "streaky shooters", they are talking about players who should have a negative z-score by this test.

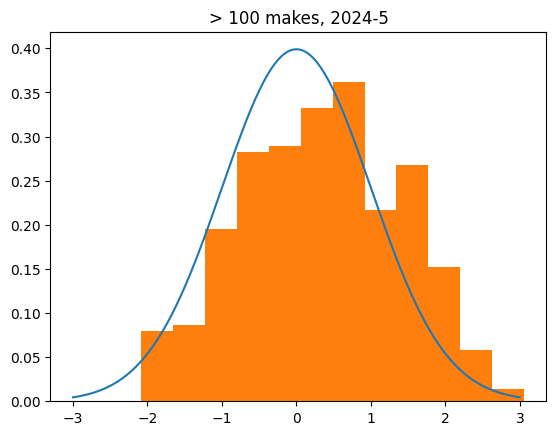

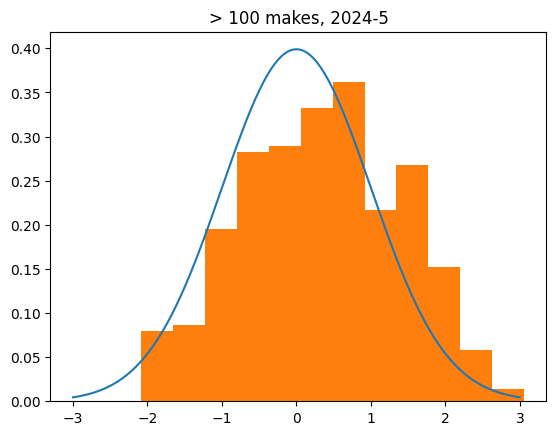

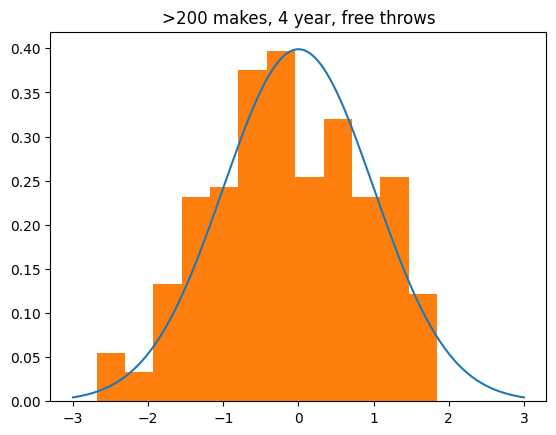

The curve over the top is the distribution of z-scores we'd expect if the players worked like weighted coin flips.

Just eyeballing it, it's pretty close. It's definitely a bell curve, centered pretty close to zero. If there is a skew, it's actually to the positive, un-streaky side, though. The mean z-score is .21, when we'd expect it to be zero.

| Statistic | z-score |
|---|---|
| count | 554 |
| mean | 0.212491 |
| std | 1.075563 |
| min | -3.081194 |
| 25% | -0.546340 |
| 50% | 0.236554 |
| 75% | 0.951653 |
| max | 3.054836 |

The Shapiro-Wilk test is a way to decide whether a set of data plausibly came from a normal distribution. The z-scores passed it. There is no conclusive evidence that players in general are streakier or less streaky than predicted by chance. This data very well could've come from flipping a bunch of coins.

But it's still sorta skewed. There were 320 players with a positive z-score (un-streaky) versus 232 with a negative z-score (streaky). That's suspicious.

Outliers

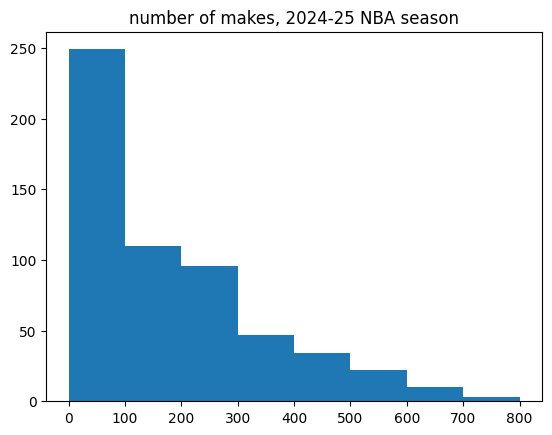

A whole lot of those 554 players didn't make very many shots.

I decided to split up players with over 100 makes versus under 100 makes. Unlike high volume shooters, the low volume shooters had no bias towards unstreakiness. They look like totally random data.

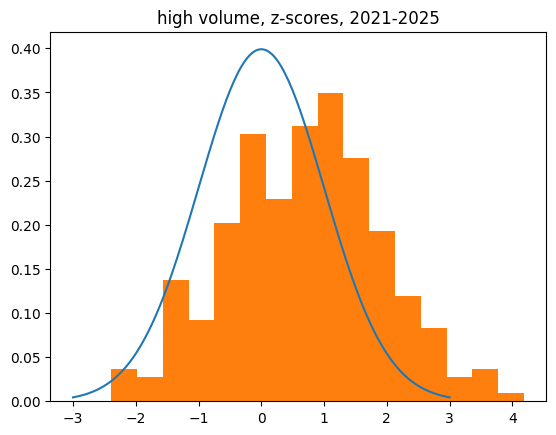

Here are just the high volume shooters (323 players in total). Notice how none of them have a z-score less than about -2. If these were coin flips, the distribution should be symmetrical.

| Statistic | z-score |
|---|---|
| count | 323 |
| mean | 0.347452 |
| std | 1.068341 |
| min | -2.082528 |
| 25% | -0.454794 |
| 50% | 0.363949 |
| 75% | 1.091244 |
| max | 3.054836 |

The Eye Test

I looked at which players had exceptionally high or low z scores. The names don't really make sense to me as an NBA fan. There were players like Jordan Poole and Jalen Green, who I think fans would consider streaky, but they had exceptionally un-streaky z-scores. I don't think the average NBA fan would say Jalen Green is less streaky than 97.5% of the players in the league, but he is (by this test).

On the other hand, the two streakiest players in the NBA this year were Goga Bitadze and Thomas Bryant, neither of whom fits the profile of the stereotypical streaky shooter by any means.

Makes vs. Streakiness

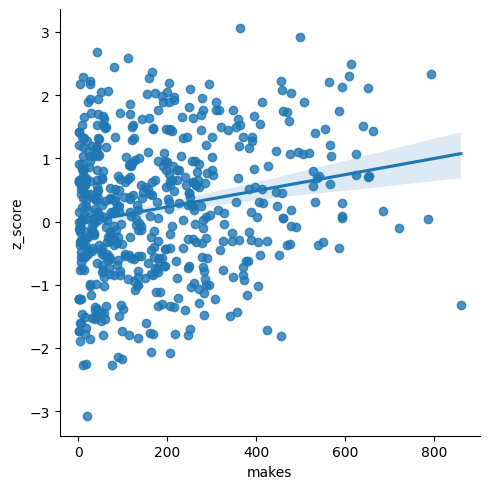

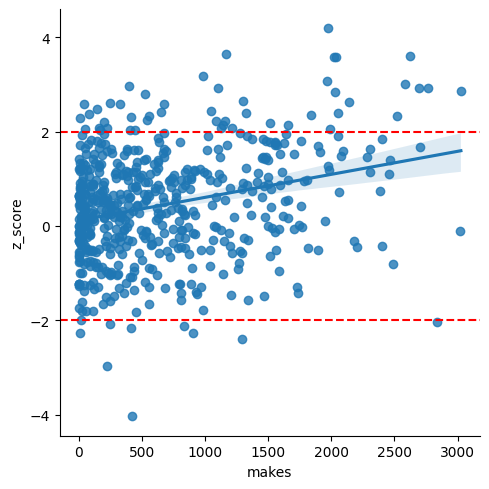

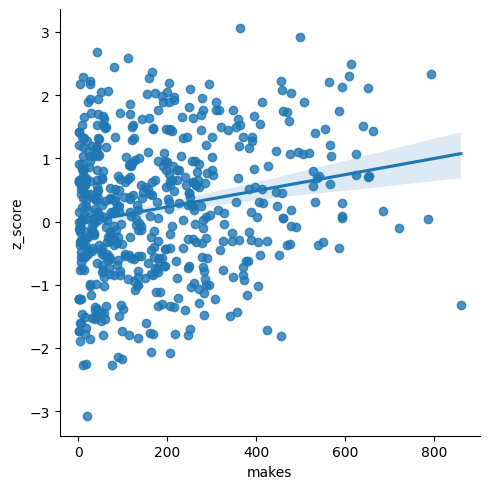

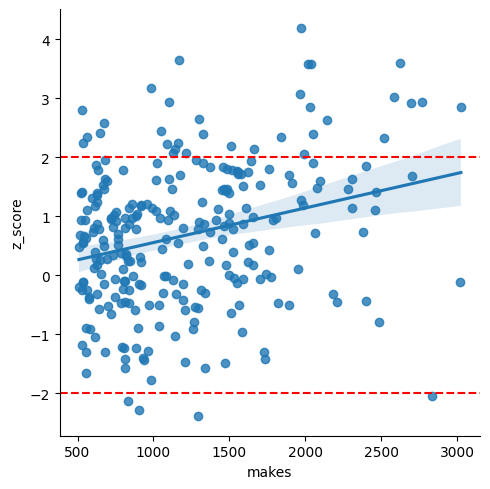

The more shots a player made this season, the more un-streaky they tended to be. Here's a plot of makes on the 2024-25 season versus the z-score.

That's pretty odd, isn't it?

Getting more data: 2021-present

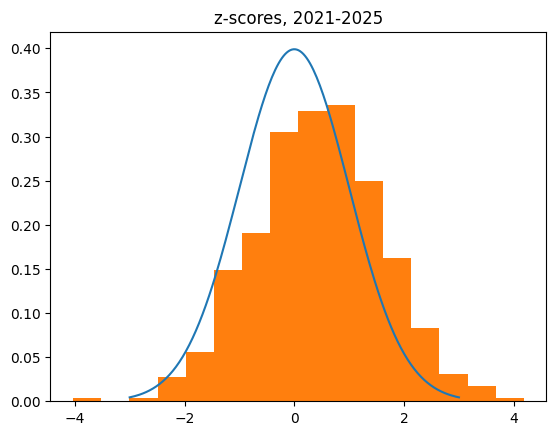

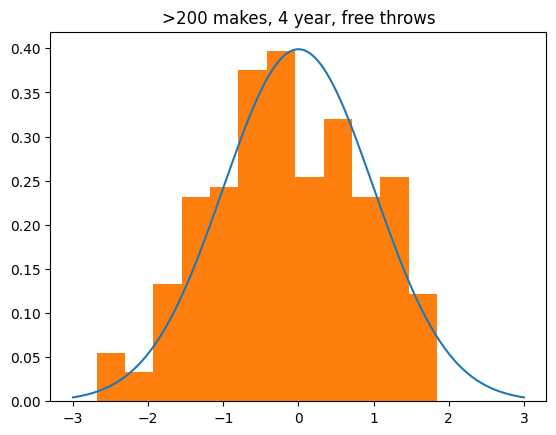

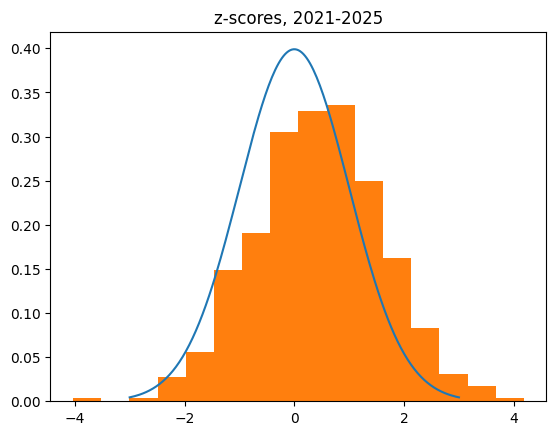

I figured a bigger sample size would be better. Maybe this season was just weird. So I got the last 4 seasons of data (2021-22, 2022-23, 2023-24, 2024-25) for players who made a shot in the NBA this season and combined them.

The four year data is even more skewed towards the lukewarm hand, or un-streaky side, than the single year data.

| Statistic | z-score |
|---|---|
| count | 562 |
| mean | 0.443496 |
| std | 1.157664 |
| min | -4.031970 |
| 25% | -0.312044 |
| 50% | 0.449647 |
| 75% | 1.184918 |
| max | 4.184025 |

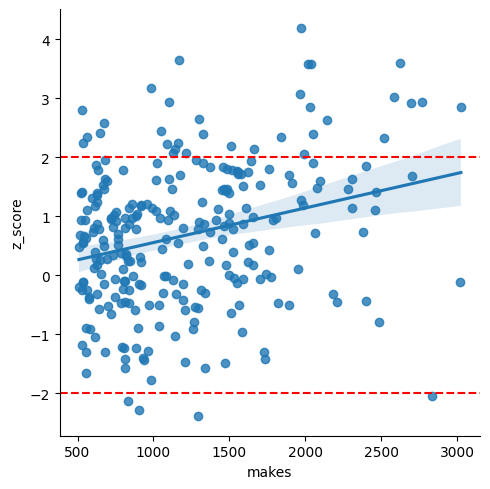

The correlation between number of makes and z-score is quite strong in the 4 year data:

There were 48 players with a z-score > 2, versus only 9 with a score < -2. That's like flipping a coin and getting 48 heads and 9 tails. There's around a 2 in 10 million chance of that happening with a fair coin.
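These lopsided splits can be checked with an exact two-sided binomial (sign) test, treating each player as an independent fair coin flip for "positive or negative z-score" and ignoring ties. Independence between players is itself an assumption. A stdlib-only sketch:

```python
from math import comb

def two_sided_binomial_p(k, n):
    """Two-sided p-value for k 'heads' out of n flips of a fair coin: the total
    probability of outcomes at least as far from n/2 as k is."""
    dist = abs(k - n / 2)
    extreme = sum(comb(n, i) for i in range(n + 1) if abs(i - n / 2) >= dist)
    return extreme / 2**n

p_four_seasons = two_sided_binomial_p(48, 48 + 9)    # z > 2 vs. z < -2, four seasons
p_one_season = two_sided_binomial_p(320, 320 + 232)  # positive vs. negative z, 2024-25
print(p_four_seasons, p_one_season)
```

The 48 vs. 9 split comes out to roughly 1.5 in 10 million, in line with the rough figure above, and the 320 vs. 232 split from the single season is a few in 10,000.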

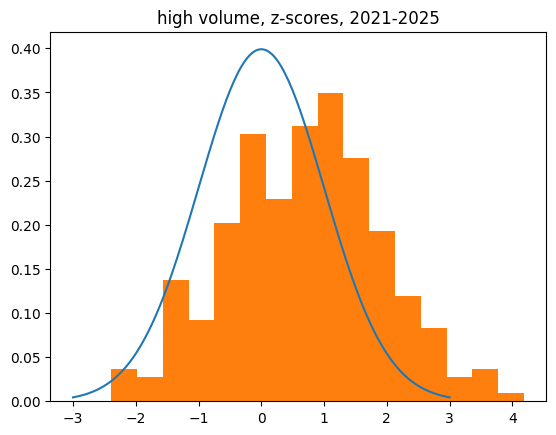

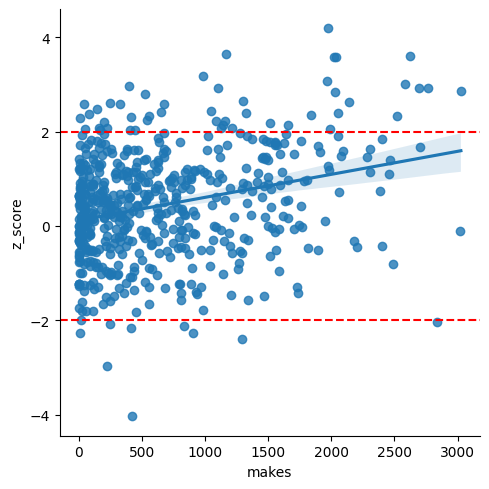

High Volume Shooters, Redux

The bias towards the lukewarm hand is even stronger among high-volume shooters. Here are the players with more than 500 makes over the past four years.

According to a Shapiro-Wilk test, the z-scores are consistent with a normal distribution, but they're no longer even close to being centered at zero. They're also overdispersed (the standard deviation is bigger than the expected 1). With a sample mean of 0.680 across 265 players, roughly nine standard errors above zero, it's not plausible that the true mean is 0.

| Statistic | z-score |
|---|---|
| count | 265 |
| mean | 0.680097 |
| std | 1.217946 |
| min | -2.392061 |
| 25% | -0.149211 |
| 50% | 0.776917 |
| 75% | 1.485595 |
| max | 4.184025 |
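One way to put numbers on "not plausible" and "overdispersed" is to test the summary statistics listed above directly. This is a minimal sketch that assumes the 265 z-scores are independent draws. The mean test is a one-sample z-test (with n this large, a t-test gives essentially the same answer). The variance check swaps in the Wilson-Hilferty normal approximation to the chi-square statistic (n - 1)·s². The helper names are hypothetical.

```python
import math

def mean_z(mean: float, std: float, n: int) -> float:
    """z statistic for H0: true mean = 0, using std / sqrt(n) as the standard error."""
    return mean / (std / math.sqrt(n))

def variance_z(std: float, n: int) -> float:
    """Approximate z for H0: true variance = 1, via the Wilson-Hilferty
    cube-root normal approximation to chi2 = (n - 1) * s^2 with n - 1 df."""
    k = n - 1
    chi2 = k * std**2
    return ((chi2 / k) ** (1 / 3) - (1 - 2 / (9 * k))) / math.sqrt(2 / (9 * k))

def two_sided_p(z: float) -> float:
    """Two-sided p-value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

# Players with more than 500 makes over the four seasons
n, mean, std = 265, 0.680097, 1.217946
z_mean = mean_z(mean, std, n)
z_var = variance_z(std, n)
print(f"mean: z = {z_mean:.2f}, p = {two_sided_p(z_mean):.1e}")
print(f"variance: z = {z_var:.2f}")
```

With these inputs the mean comes out about 9 standard errors above zero, and the variance check gives a z of roughly 4.9. Both are far outside what independent random shooting would produce.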

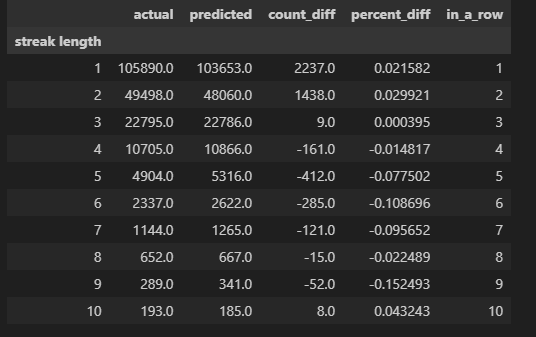

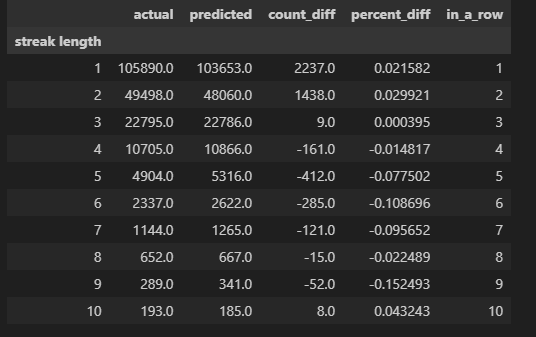

Streak Lengths

I compared the lengths of make/miss streaks for actual NBA players against simulated results. The simulation took the exact number of makes and misses for each NBA player and shuffled them randomly. What I found confirmed the "lukewarm hand": overall, NBA players have slightly more 1- and 2-shot streaks than expected, and fewer long streaks than expected.
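The shuffling baseline described above can be sketched like this. Several assumptions apply: 1 = make and 0 = miss, streaks of makes and streaks of misses are pooled together, the shot log is invented, and the function names are hypothetical rather than taken from the author's code.

```python
import random
from collections import Counter

def streak_lengths(shots: list[int]) -> Counter:
    """Count streaks (runs of consecutive makes or consecutive misses) by length."""
    counts = Counter()
    run = 1
    for prev, cur in zip(shots, shots[1:]):
        if cur == prev:
            run += 1
        else:
            counts[run] += 1
            run = 1
    if shots:
        counts[run] += 1  # close out the final streak
    return counts

def shuffled_baseline(shots: list[int], trials: int = 1000, seed: int = 0) -> dict[int, float]:
    """Average streak-length counts after shuffling the same makes and misses."""
    rng = random.Random(seed)
    pool = list(shots)  # copy, so the caller's shot log isn't reordered
    total = Counter()
    for _ in range(trials):
        rng.shuffle(pool)
        total.update(streak_lengths(pool))
    return {length: count / trials for length, count in sorted(total.items())}

# Hypothetical shot log for one player: 1 = make, 0 = miss
shots = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
print("actual:  ", dict(sorted(streak_lengths(shots).items())))
print("shuffled:", shuffled_baseline(shots))
```

Shuffling keeps each player's shooting percentage fixed, so any gap between the two counts comes only from the ordering of makes and misses.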

Obvious objections, and what about free throws?

I'm treating every field goal attempt as if it has the same chance of going in. Clearly that's not the case. Players, especially high-volume scorers, can choose which shots they take. It's easy to imagine that a player who has missed several shots in a row and is feeling "cold" would concentrate on taking only higher-percentage shots. There's also the fact that I'm combining games together. That could make players look less streaky than they are within the course of a single game. But it should also make truly unstreaky players look less unstreaky. Streaks getting "reset" at the end of each game should make players act more like a purely random process: neither too streaky nor too unstreaky. It shouldn't increase the standard deviation of the z-scores like we're seeing, or cause a shift towards unstreakiness.

I may do a simulation to illustrate that, but in the meantime, the most controlled shot data we have is free throw data. Every free throw should have exactly the same level of difficulty for the player.
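For the game-boundary argument, a simulation could look something like this minimal sketch. It rests on several assumptions. First, the z-score is taken to be a Wald-Wolfowitz runs-test z-score; this section doesn't name the test, but the sign convention matches, with positive meaning more alternation (the lukewarm side). Second, each simulated player takes 10 shots in each of 20 games. Third, the in-game hot hand (55% after a make, 35% after a miss, reset to 45% at the start of every game) is invented for illustration. Under the null model, concatenating games should still give z-scores centered near 0 with a standard deviation near 1. The hot-hand model should still come out streaky (negative), rather than shifting toward unstreakiness.

```python
import math
import random
import statistics

def runs_z(shots: list[int]) -> float:
    """Wald-Wolfowitz runs-test z-score. Positive = more runs than chance
    (less streaky); negative = fewer runs than chance (streakier)."""
    n1 = sum(shots)          # makes
    n2 = len(shots) - n1     # misses
    n = n1 + n2
    if n1 == 0 or n2 == 0:
        return 0.0           # all makes or all misses: the test is undefined
    runs = 1 + sum(a != b for a, b in zip(shots, shots[1:]))
    expected = 2 * n1 * n2 / n + 1
    variance = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n**2 * (n - 1))
    return (runs - expected) / math.sqrt(variance)

def play_game(rng: random.Random, shots: int, p_after_make: float,
              p_after_miss: float, p_first: float) -> list[int]:
    """One game. The first shot ignores the previous game, so streaks reset."""
    out = [int(rng.random() < p_first)]
    for _ in range(shots - 1):
        p = p_after_make if out[-1] else p_after_miss
        out.append(int(rng.random() < p))
    return out

def season_z(rng: random.Random, games: int, shots_per_game: int,
             p_after_make: float, p_after_miss: float, p_first: float = 0.45) -> float:
    """Concatenate every game into one sequence, then compute one z-score."""
    season: list[int] = []
    for _ in range(games):
        season += play_game(rng, shots_per_game, p_after_make, p_after_miss, p_first)
    return runs_z(season)

rng = random.Random(42)
# Null model: every shot is an independent 45% shot
null = [season_z(rng, 20, 10, 0.45, 0.45) for _ in range(2000)]
# Hypothetical hot hand within each game, reset at the start of every game
hot = [season_z(rng, 20, 10, 0.55, 0.35) for _ in range(2000)]
print(f"null: mean {statistics.mean(null):+.2f}, std {statistics.stdev(null):.2f}")
print(f"hot:  mean {statistics.mean(hot):+.2f}, std {statistics.stdev(hot):.2f}")
```

Game resets dilute a within-game hot hand, but they can't push it past zero into lukewarm territory. That is consistent with the argument that combining games shouldn't produce the shift seen in the field goal data.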

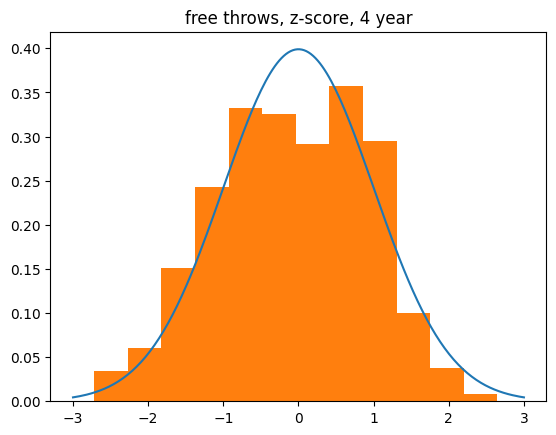

I got the data for the 200,000+ free throws in the NBA regular season over the past four years (October 2021 through April 2025).

Here are the z-scores for all players. There's a big chunk taken out of the middle of the bell curve, but it's normal-ish other than that.

240 players have made over 200 free throws in the past 4 years. When I restrict to just those players, there's a slight skew towards the "hot hand", or being more streaky than expected. There are no exceptionally lukewarm hands when it comes to free throws. It's sort of the mirror image of what we saw with high volume field goal shooters.

| Statistic | z-score |
|---|---|
| count | 240 |
| mean | -0.144277 |
| std | 1.021330 |
| min | -2.686543 |
| 25% | -0.854723 |
| 50% | -0.174146 |
| 75% | 0.660302 |
| max | 1.845302 |
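To put the free-throw skew in perspective, the same kind of back-of-the-envelope check can be run on the summary statistics above. This is a minimal sketch that assumes players' z-scores are independent and uses a normal approximation. The mean sits about 2.2 standard errors below zero (two-sided p around 0.03). That is suggestive, but nowhere near the roughly nine standard errors seen for high-volume field goal shooters.

```python
import math

# Players with 200+ made free throws over the four seasons
n, mean, std = 240, -0.144277, 1.021330

se = std / math.sqrt(n)                  # standard error of the mean z-score
z = mean / se                            # test of H0: true mean z-score = 0
p = math.erfc(abs(z) / math.sqrt(2))     # two-sided p, normal approximation
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```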

Conclusions, for now

I feel comfortable concluding that the hot hand doesn't exist when it comes to field goals. I can't say why there's a tendency towards unstreakiness yet, but I suspect it is due to shot selection. Players who have made a bunch of shots may take more difficult shots than average, and players who have missed a bunch of shots will go for an easier shot than average. While players can't choose when to "heat up" or "go cold", they can certainly change shot selection based on their emotions or the momentum of the game.
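The shot-selection explanation can be illustrated with a toy model. This is a minimal sketch under loud assumptions. It uses a Wald-Wolfowitz runs-test z-score (assumed; positive = un-streaky). The shooter's shot choice reacts only to the previous shot. The make probabilities are invented: 40% after a make (a harder shot) and 50% after a miss (an easier shot). The shooter never gets hot or cold; only shot difficulty changes. Even so, the z-scores should come out positive on average, the lukewarm-hand signature, while a control with a flat 45% stays near zero. This shows the mechanism is plausible, not that it is what's happening in the NBA data.

```python
import math
import random
import statistics

def runs_z(shots: list[int]) -> float:
    """Wald-Wolfowitz runs-test z-score (positive = less streaky than chance)."""
    n1 = sum(shots)
    n2 = len(shots) - n1
    n = n1 + n2
    if n1 == 0 or n2 == 0:
        return 0.0  # undefined when every shot has the same outcome
    runs = 1 + sum(a != b for a, b in zip(shots, shots[1:]))
    expected = 2 * n1 * n2 / n + 1
    variance = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n**2 * (n - 1))
    return (runs - expected) / math.sqrt(variance)

def shot_selection_season(rng: random.Random, n_shots: int = 300,
                          p_after_make: float = 0.40,
                          p_after_miss: float = 0.50) -> list[int]:
    """Hypothetical shooter with no hot hand: after a make they take a harder
    shot (lower make chance); after a miss, an easier one (higher make chance)."""
    shots = [int(rng.random() < 0.45)]
    for _ in range(n_shots - 1):
        p = p_after_make if shots[-1] else p_after_miss
        shots.append(int(rng.random() < p))
    return shots

rng = random.Random(7)
zs = [runs_z(shot_selection_season(rng)) for _ in range(1000)]
# Control: shot difficulty never changes (45% every time)
neutral = [runs_z(shot_selection_season(rng, p_after_make=0.45, p_after_miss=0.45))
           for _ in range(1000)]
print(f"shot selection: mean z = {statistics.mean(zs):+.2f}")
print(f"control:        mean z = {statistics.mean(neutral):+.2f}")
```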

There may be a slight tendency towards the hot hand when it comes to free throws. It's worth investigating further, I think. But the effect there doesn't appear to be nearly as strong as the lukewarm hand tendency for field goals.

May 01, 2025

What geology can tell us about Kevin Durant's next team

When NBA superstar Kevin Durant left the Oklahoma City Thunder to join the Golden State Warriors, he said that doing so was taking "the hardest road". This was met with a lot of mockery, because the Warriors had won 73 games the previous season, the most in NBA history.

It was widely regarded as an uncool move: ring chasing, the ultimate in bandwagon riding. Judged by the level of challenge he chose, it was clearly an absurd thing to say. It also made the NBA less interesting for several years, so he deserved some hate for it.

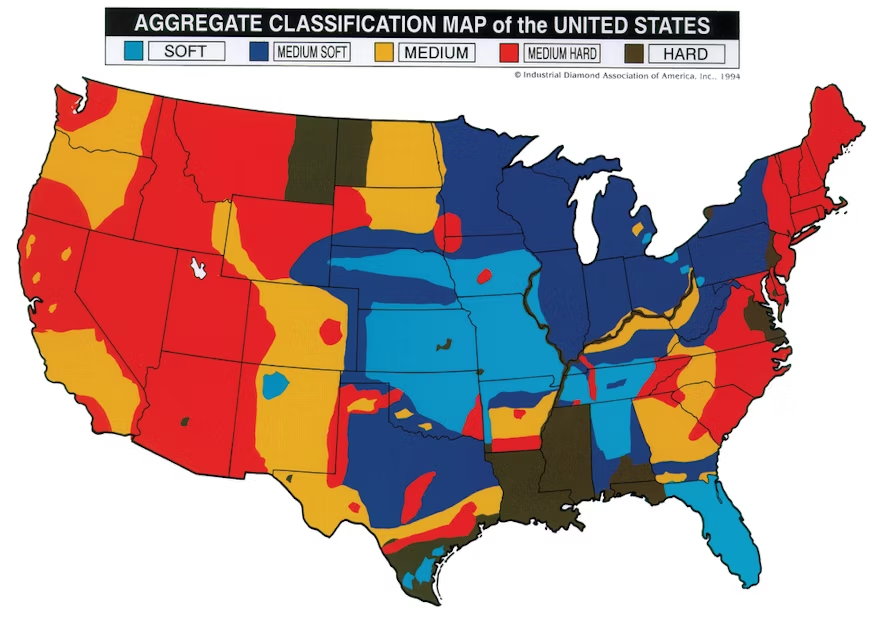

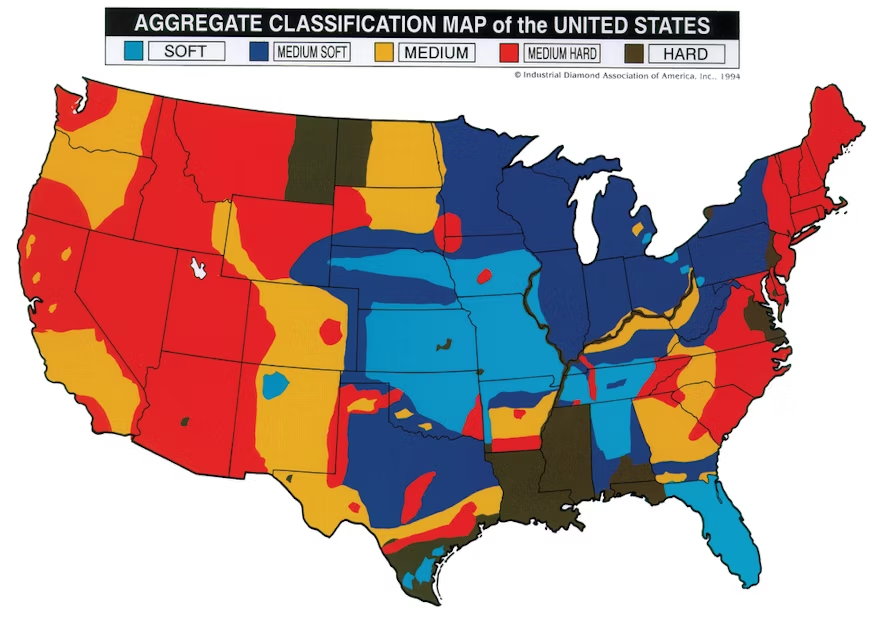

What people missed was that according to geology, he wasn't totally off-base. Streets are paved with asphalt, which is a combination of local rocks (aggregate) and tar. That means that some regions of America have harder roads than others, based on the local geology:

(source: https://www.forconstructionpros.com/equipment/worksite/article/10745911/aggregate-hardness-map-of-the-united-states)


Oklahoma City is located right in the center of Oklahoma, with some of the softest aggregate in the United States. It's reasonable for someone who cares about road hardness to want to leave. Just about anywhere (except for Florida) would have been an improvement.

The "hardest road" out of OKC at that time would've been the one to New Orleans. It's about a 700-mile drive, and it looks like a nice gradient from some of the softest roads in the United States to the very hardest ones.

The New Orleans Pelicans at the time were pretty bad: basically just Anthony Davis, a couple of good role players (Ryan Anderson, Jrue Holiday), and a rich collection of "Let's Remember Some Guys" Guys (Jimmer Fredette, Nate Robinson, Luke Babbitt, Ish Smith, Alonzo Gee, Norris Cole). KD and AD on the same team would have been cool, but even with Kevin Durant, the Pelicans would likely have been pretty bad. Certainly worse than the OKC team that Durant wanted to leave.

Although technically the "hardest road" out of Oklahoma City, going to New Orleans would have been a poor career choice for KD. The Pelicans have always been a cheap, poorly run team. I can't imagine it being a destination for any free agent of Kevin Durant's caliber.

He really should have said, "I'm taking the hardest road that doesn't lead to a mismanaged tire fire of a team. Also, by 'hardest' I mean on the Mohs scale, not the challenge," and everybody would have understood.

Northern California has a medium-hard substrate, so his choice to go to the Warriors was definitely a harder road than a lot of other places he could have gone. Since leaving the Warriors, he's played for two other teams with medium-hard roads: the Brooklyn Nets and the Phoenix Suns. He's never chosen to take a softer road. Give him credit for that.

Now that there are rumors about Kevin Durant being traded from the Suns, what can geology tell us about Durant's next destination?

The other NBA cities with medium-hard to hard roads are New Orleans, Boston, Charlotte, Houston, New York, Sacramento, Utah, and Washington DC. He's from DC, so that might be nice. But Durant always says he wants to compete for a championship, so we can rule out Washington, along with New Orleans, Charlotte and Utah.

I can't really see Boston or New York wanting to tweak their rosters too much, because they're both already good enough to win a championship and don't have a lot of tradeable assets. Sacramento's not a great fit. The Kings would be dreadful on defense, and have too many players who need the ball at once.

That leaves Houston. Durant would fix the Rockets' biggest weakness -- not having a go-to scorer -- and Houston could surround him with a bunch of guys who can play defense. Most importantly, he'd get to continue to drive on medium-hard roads.

Kevin Durant to the Houston Rockets. The geology doesn't lie.


(