Aug 08, 2025

Song: The Impressions, "Do You Wanna Win?"

(This is an excerpt from a larger project about sports gambling. The code used and early drafts of some of the chapters can be found at https://github.com/csdurfee/book.)

I'm going to return to the subject of sports betting this week. Let's start with something easy. How do you avoid going broke betting on sports? That's easy. Reduce your bet size to zero. Scared money don't lose none.

As long as there is randomness, there will be outliers and unexpected results. It is impossible to escape randomness in sports betting. Any time you decide to bet, you enter the kingdom of randomness and have to abide by its laws. It doesn't matter whether you have an advantage over the house (unless the advantage is truly massive). Nothing is guaranteed.

This is pretty hard for us to deal with, because our brains are pattern-finding machines. They will find patterns, whether or not any exist, to give us a sense of control.

Notes

I talk about "win rate" a bunch below. That means the percent of the time a gambler can win bets at even odds (such as a standard spread bet on the NBA or NFL.)

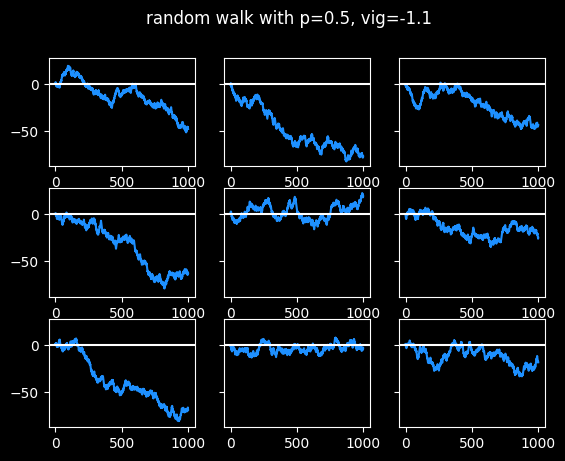

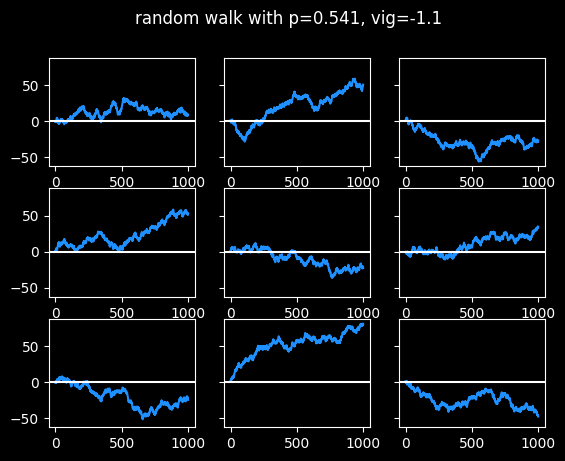

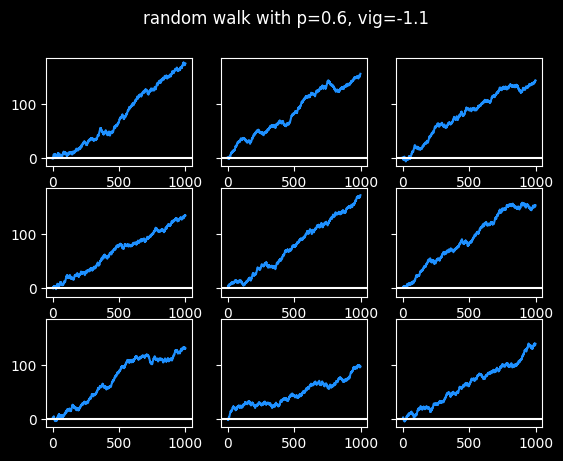

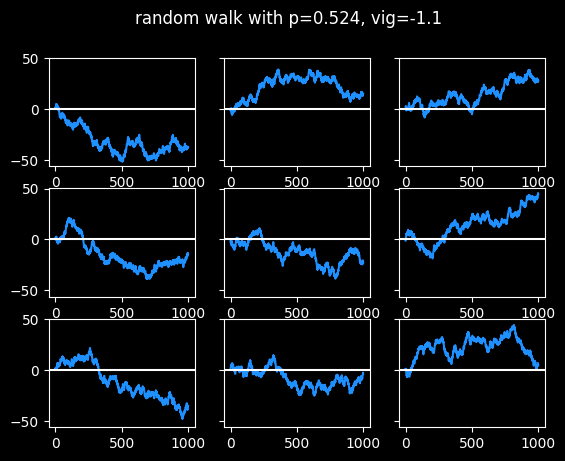

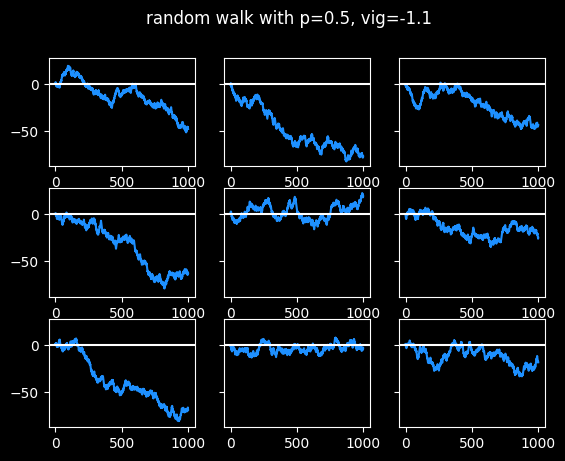

The random walks shown below are a little different from a standard one, because I'm simulating the vig. The walker goes 1 block north when the coin comes up heads (or they win the bet), and 1.1 blocks south when the coin comes up tails (or they lose the bet.)
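Here's a minimal sketch of that kind of walk. It isn't the code from the book repo, and the function name, seed and defaults are my own placeholders. It just applies the rule above: +1 on a win, -1.1 on a loss.

```python
import random

def vig_walk(n_bets, win_rate, rng, win_step=1.0, loss_step=1.1):
    """Return the walker's position after each bet of a random walk with vig."""
    position = 0.0
    path = []
    for _ in range(n_bets):
        if rng.random() < win_rate:
            position += win_step   # heads / winning bet: 1 block north
        else:
            position -= loss_step  # tails / losing bet: 1.1 blocks south
        path.append(position)
    return path

rng = random.Random(2025)  # arbitrary seed, only so the run is repeatable
path = vig_walk(1000, 0.541, rng)
print(f"final position after {len(path)} bets: {path[-1]:+.1f} units")
```

Changing the seed gives a different walk at the same win rate, which is the whole point of the graphs in this piece.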

In the marches of madness

The NCAA holds the March Madness tournament every year to determine who the best college basketball team is. It's a single elimination tournament of 64 teams, arranged into a big bracket.

Say we do a March Madness style bracket with coin flippers instead of basketball teams. We randomly assign them places in the bracket. For each matchup, the coin flipper at the top of the matchup flips a coin. If they get heads, they survive and advance. If they get tails, they lose.

Somebody's going to go 6-0 and win that tournament.

Now imagine we expanded that to every single person on the planet. Every single person gets matched up into a 64-person bracket, then each of those winners gets added to another 64-person bracket, and so on.

Eventually, someone has to emerge the victor with a 32-0 record or something -- the greatest coin flipper in the world. Right?

Random walks

Imagine going on a walk. Every time you get to an intersection, you flip a coin. If it's heads, you go one block north and one block east. If it's tails, you go one block south and one block east. This is called a random walk. It's a bit like a gambler's profits or losses plotted on a graph as a function of time.

I think there's a huge value in knowing what random walks look like. Do they remind you of anything?

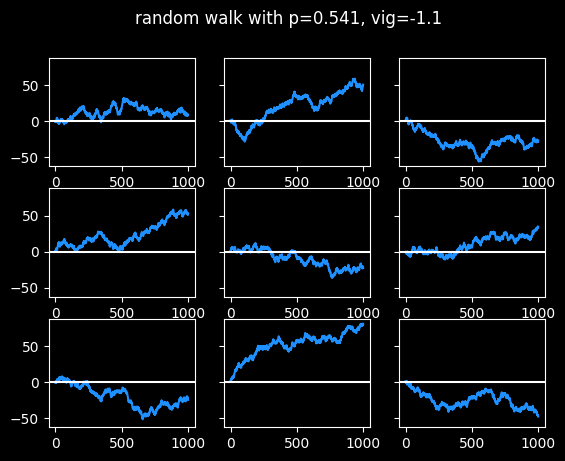

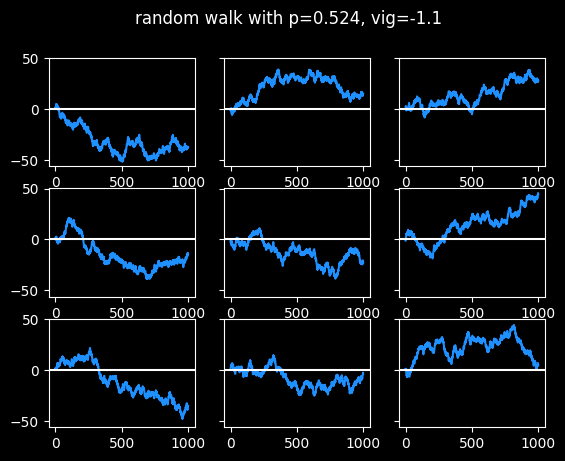

Touts are people who sell recommendations about which bets to take. Pay $30 and they'll tell you which side to bet on the big game tonight. (I have a whole section about touts in the book.) The best tout I could find has had pretty steady success for nearly 20 years, with a win rate of 54.1% against the standard vig. That's statistically significant, but it's not enough to guarantee success moving forwards. Even if they continue to place bets that should win 54.1% of the time -- they're flipping a slightly biased coin -- that doesn't mean they'll make money on those bets. Let's look at some random walks at 54.1% win rate:

Three of the random walks ended up around 50 units after 1,000 bets, which is pretty good. Two of them made a tiny bit of money. The other four lost money. This is a small sample size, but 4 out of 9 walks (44%) losing money after paying for 1,000 picks at $30 a pop isn't great. (More info on the economics of touts in the book.)

The touts I looked at generally don't sell picks for every single game. So with roughly 1,230 games in an NBA regular season, this could be several years' worth of results.

Imagine all of these as 9 different touts, with the exact same level of skill at picking games. But some of them look like geniuses, and some look like bozos. There would probably be some selection bias. The ones with the bad records would drop out -- who's buying their picks if they're losing money? And the ones who did better than their true skill level of 54.1% would be more likely to stick with it. Yet all these random walks were generated with the same 54.1% win rate.

If you bought 1,000 picks from this tout, you don't get to choose which of the \(2^{1000} = (2^{10})^{100} \approx 10^{300}\) possible random walks you will actually get. If each bet has a 54.1% chance of winning, there's no guarantee you will have exactly 541 wins and 459 losses at the end of 1000 bets.
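Nine walks is a tiny sample, but the exact odds can be worked out from the binomial distribution. This is a sketch under my own assumptions: every bet is independent, wins with the same probability, and risks 1.1 units to win 1. The function names are made up for illustration.

```python
from math import exp, lgamma, log

def binom_pmf(k, n, p):
    """Probability of exactly k wins in n independent bets (computed in log space)."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)

def prob_losing_money(n, p, risk=1.1, win=1.0):
    """Chance that n bets at win probability p finish with a net loss."""
    total = 0.0
    for wins in range(n + 1):
        profit = wins * win - (n - wins) * risk
        if profit < 0:
            total += binom_pmf(wins, n, p)
    return total

n, p = 1000, 0.541
print(f"P(exactly 541 wins) = {binom_pmf(541, n, p):.4f}")
print(f"P(net loss after {n} bets) = {prob_losing_money(n, p):.4f}")
```

The log-space form is there because the raw factorials and powers for 1,000 bets are too large and too small for ordinary floating point.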

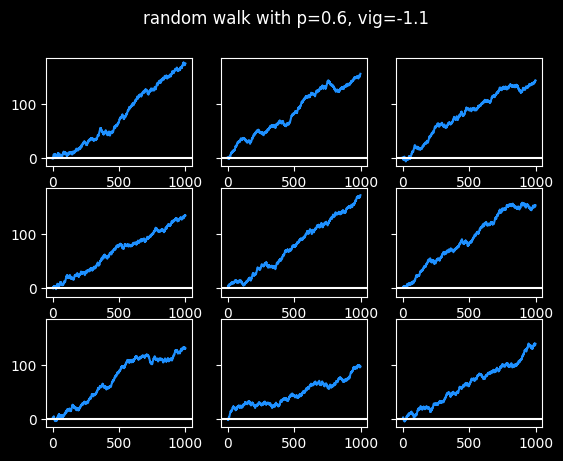

Here's what someone who is right 60% of the time looks like.

Success is pretty boring.

I've always said it's much harder to learn from success than from failure. At a 60% win rate, none of them really have long cold streaks, just small breaks between hot streaks. It wouldn't be interesting to tell stories about those graphs. There's nothing to learn, really.

I don't think it's possible to win 60% against the spread, based on what are called push charts, discussed in the book.

The graphs at the 54.1% success rate appear a lot more human. They have hot and cold streaks, swoons, periods where they seem stuck in a range of values. Some of them scuffled the whole time, a couple finally got locked in near the end, a couple were consistently good. Some had good years, some had bad years. Even though they are randomly generated, they look like they have more to teach us, like they offer more opportunities to tell stories. But they all have the exact same win rate, or level of skill at handicapping.

No outcome is guaranteed, but the higher the win rate, the more consistently the graph is going to go up and to the right at a steady pace.

Finally, here are some walks at 52.4% win rate, the break-even point. Most results end up close to zero after 1000 bets, but there is always a possibility of an extended run towards the positive or negative side -- it happened 4/9 times in this sample:

The Axe Forgets, The Tree Remembers

If those 9 graphs were stock prices, which one would you consider the best investment?

Well, we know they're equally bad investments. They're winning just enough to break even, but not make profits.
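The 52.4% break-even figure falls straight out of the price of a standard bet. A quick sketch, assuming the usual risk-110-to-win-100 pricing (the function name is just for illustration):

```python
def break_even_win_rate(risk, win):
    """Win rate at which expected profit is zero when risking `risk` to win `win`."""
    # p * win - (1 - p) * risk = 0  =>  p = risk / (risk + win)
    return risk / (risk + win)

standard = break_even_win_rate(110, 100)  # standard -110 spread bet
print(f"break-even win rate at -110: {standard:.1%}")
```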

They all have the same Expected Value moving forwards. Previous results are meaningless and have no bearing on whether the next step will be up or down. Every step is the start of an entirely new random walk. The coin doesn't remember what has happened in the past. We do.

This is what's known in math as a Martingale, named after a betting system that was popular in France hundreds of years ago. (I previously talked about Martingales in the series on the hot hand.)

The basic idea behind all these betting systems is to chase losses by betting more when you're losing. Hopefully it's obvious that these chase systems are crazy, though formally proving it led to a lot of interesting math.

Fallacy and ruin

Even though chase systems are irrational, they've persisted through the centuries. Human beings are wired to be semi-rational -- we use previous data to try and predict the future, but we use it even when the data was randomly generated, and even when we don't have a significant amount of data. We need coherent stories to tell about why things happened. There is no rational reason to believe in a chase system, but I think there are semi-rational reasons to fall prey to the gambler's fallacy.

I hope these random walks show that having a modest, plausible advantage over the house isn't a guarantee of success, even over a really long timespan. Positive Expected Value is necessary, but not sufficient, for making money long term.

The vast majority of gamblers bet with negative expected value due to the vig, and possibly biases in the lines against public teams, as we saw in a previous installment. If each bet the average gambler makes has a negative expected value, they can't fix that by betting MORE.

"If I keep doubling down, eventually I'll win it all back." Maybe if you have infinite capital and unlimited time. Otherwise the Gambler's Ruin is certain. The market can stay liquid longer than you can stay irrational.

Maximizing profits: the Kelly criterion

Let's say a bettor really does have an edge over the house -- they can beat the spreads on NBA basketball 56% of the time.

Even with that advantage, it's easy to go broke betting too much at once. Suppose they bet 25% of their bankroll on each bet. What happens after 200 bets? 200 bets is not a lot, roughly 1 month of NBA games if they bet on every game.

Once in a blue moon they end up a big winner, but 53% of the time the gambler is left with less than 5% of their bankroll after 200 bets even though they have a pretty healthy advantage over the house. So it's still a game of chance rather than skill, even though it would require a lot of mental labor to make the picks, and time to actually bet the picks. The mean rate of return is quite impressive (turning $100 into $4,650), but the median result is bankruptcy (turning $100 into $3).

Intuitively, there has to be some connection between the betting advantage and the optimal amount to risk on each bet. If a gambler only has a tiny advantage, they should only be making tiny bets as a percentage of their total bankroll. The better they are, the more they can risk. And if they have no advantage, they shouldn't bet real money at all.

That intuition is correct. The Kelly criterion (https://en.wikipedia.org/wiki/Kelly_criterion) gives a formula for the exact percent of the bankroll to risk on each bet in order to maximize the expected long-run growth rate of the bankroll, given a certain level of advantage.

In this case, the Kelly criterion says to bet 7.6% of the total bankroll on each bet. I did 100,000 simulations of a sequence of 200 bets following the Kelly criterion. The gambler only went broke around one time in 1,000, which is much better. The median result was turning $100 into $168, which is pretty good. However, the gambler still lost money 31% of the time.
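For reference, here's a small sketch of the standard Kelly formula for a simple win/lose bet, f = p - q/b, where p is the win probability, q = 1 - p, and b is the net payout per unit staked. I'm assuming standard -110 pricing, so b = 100/110. The function name and defaults are mine.

```python
def kelly_fraction(p, risk=110, win=100):
    """Kelly stake as a fraction of bankroll for a bet that risks `risk` to win `win`."""
    b = win / risk  # net payout per unit staked
    q = 1 - p       # probability of losing
    return p - q / b

f = kelly_fraction(0.56)
print(f"Kelly stake at a 56% win rate and -110 odds: {f:.1%}")
```

At the 52.4% break-even win rate the same formula returns zero, which matches the intuition that a gambler with no advantage shouldn't bet at all.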

This is just one month of betting, assuming the gambler bets on every NBA game. Losing money 31% of the time seems pretty high for what's supposed to be the optimal way to bet.

How about a longer period of time? I simulated 1,000 bets this time, nearly a whole season of the NBA. The median outcome is turning $100 into $1,356, which is a sweet rate of return. But the chances of going broke actually increased! The player will go broke 1.4% of the time, about 11x more often on 1,000 bets than 200. That seems unfair, but the Kelly criterion doesn't make any guarantees about not going broke. It just offers the way to maximize long-run growth if the gambler knows the exact advantage they have over the house.

Partial Kelly Betting

Kelly Betting is the optimal way to maximize profits, but what about lower stakes? The real power of Kelly betting is its compounding nature -- as the bankroll gets bigger or smaller, the bet size scales up or down as well. It also corresponds to our incorrect intuitive understanding of randomness -- it makes sense someone should bet smaller amounts when they're on a cold streak, and larger amounts when they're on a hot streak.

What if the gambler only bets 2% of their bankroll instead of the 7.6% recommended by the Kelly criterion? They don't go broke a single time in 100,000 simulations of 1,000 bets. The mean rate of return is 4.6x and the median is 3.7x. That's a pretty nice return on investment, relative to the risk. The gambler still lost money 2.8% of the time, though. Being conservative, betting a lot of games at positive expected value, and betting the right way greatly increase the chances of success, but nothing can eliminate the possibility of failure.
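Here's a rough sketch of this kind of simulation. It is not the code behind the numbers above. I'm assuming the stated percentage is the share of the current bankroll risked on each bet at -110, and I'm using far fewer runs, so the output won't match the figures in the text exactly. All names and the seed are placeholders.

```python
import random
from statistics import median

def simulate_bankroll(fraction, n_bets, rng, p=0.56, start=100.0, payout=100 / 110):
    """Stake a fixed fraction of the current bankroll on each of n_bets bets."""
    bankroll = start
    for _ in range(n_bets):
        stake = bankroll * fraction      # bet size scales with the bankroll
        if rng.random() < p:
            bankroll += stake * payout   # win: collect stake * (100/110)
        else:
            bankroll -= stake            # loss: the stake is gone
    return bankroll

rng = random.Random(56)  # arbitrary seed for repeatability
for fraction in (0.25, 0.076, 0.02):
    finals = [simulate_bankroll(fraction, 1000, rng) for _ in range(500)]
    losers = sum(final < 100 for final in finals) / len(finals)
    print(f"stake {fraction:.1%}: median ${median(finals):,.2f}, lost money {losers:.1%}")
```

With proportional staking the bankroll never hits exactly zero, so counting how often the gambler "goes broke" means picking a cutoff. That cutoff isn't stated in the text, so I've left it out of the sketch.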

Imagine doing 1000 bets at 56% win percentage and a conservative bet size, and still losing money. Wild, isn't it? If you take one thing away from this article, it should be:

Failure is always an option.

Betting a constant amount

You wouldn't have that problem with betting a constant amount, right? Say a gambler has a bankroll of $100 and bets to win $2 on each game, for 1,000 games at a 56% win rate.

The Expected Value of playing this way doesn't have any randomness in it. It's just a simple algebra problem. According to EV, they should end up with $252 at the end of the season, for a profit of $152. Nice. But as I've mentioned before, EV says nothing about the range of possible outcomes.
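The algebra, as a sketch: I'm assuming each bet is sized to win $2 at -110 (so it risks $2.20), which is the stake size that reproduces the $152 figure. The function name is mine.

```python
def flat_bet_ev(n_bets, p, to_win, risk):
    """Expected total profit from n_bets bets of a constant size."""
    return n_bets * (p * to_win - (1 - p) * risk)

profit = flat_bet_ev(1000, 0.56, to_win=2.00, risk=2.20)
print(f"expected profit: ${profit:.0f}, expected bankroll: ${100 + profit:.0f}")
```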

If I actually simulate it, a pretty wide range of outcomes is possible. 99% of the time, the gambler makes money, but 6 times out of 100,000, they lose everything and more. (6 in 100,000 is about the same odds as winning a 14 leg parlay.)

With betting a fixed size, the rate of return is lower and the risk of going broke doesn't go away. So it's sub-optimal compared to Kelly-style betting with a very small percentage of the total bankroll.

Oct 19, 2025

Song: The Meters, "People Say"

In a previous installment, I looked at statistics about NBA gambling that I obtained from sportsbookreview.com. I thought it was pretty interesting, but there were some huge gaps in the data, and I wasn't sure what sportsbook some of it was coming from. So I didn't have a ton of confidence in my results.

I thought it was a pretty cool idea, though, so I tracked down a better source of data via Yahoo's NBA pages. Yahoo loads gambling data from the BetMGM sportsbook, and I found a nice internal API for getting it all. I was able to get data on the past four seasons of NBA games, and although data is missing for some games, it's much more complete, and has a bunch more info, than the original dataset.

All code and data are available online at https://github.com/csdurfee/scrape_yahoo_odds/. The explore.ipynb notebook has all the calculations and charts used in this article.

As usual with gambling content, I'm presenting this data because I think it is interesting to study how it works, and what it tells us about basketball and human nature. I don't recommend you bet based on information in this article, or at all.

The data I'm looking at is every regular season and playoff NBA game from the 2021-22, 2022-23, 2023-24 and 2024-25 seasons. No preseason games or All-Star games, but the Emirates NBA Cup championship game is included.

There are 435 games that are missing stake/wager data (the percentage of money and bets placed on each side, respectively.) That leaves 4,840 NBA games that I have analyzed. The stake and wager percentages seem to be pretty similar to each other, so I'm just using the wager data.

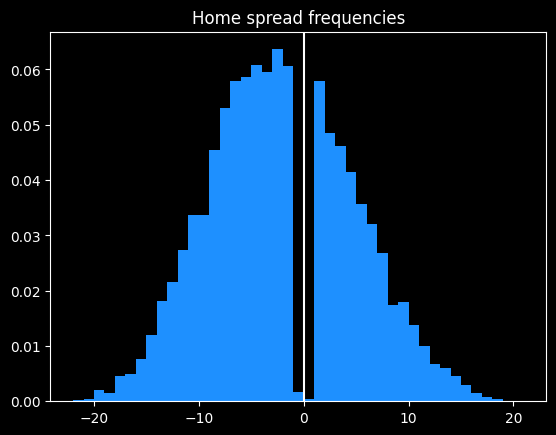

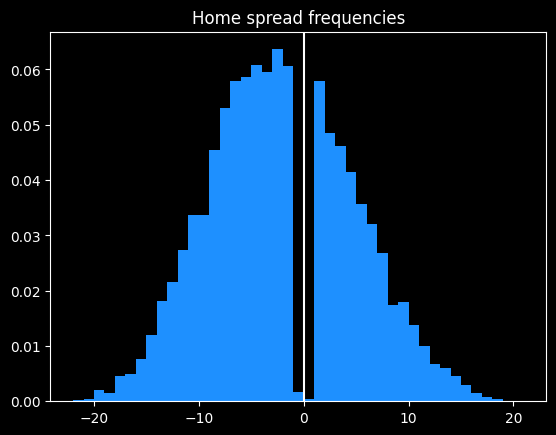

What do the spreads look like? Are there any obvious biases?

The home team went 2393-2447 (49.4%) against the spread over the last 4 years. The favorite team went 2427-2413 (50.1%) against the spread in that time.

The home team was the favorite in 3044/4840 (62.9%) of games. The median spread is the home team -3.5 (favored by 3.5 points). Here are the frequencies:

Basketball games never end in a tie, and there are strategic reasons why NBA games rarely end in a one point difference.

Around half of all home lines are one of -2.5, -1.5, -4.5, -3.5, +1.5, -6.5, -5.5, -7.5 or +2.5 (in descending order of frequency).

98.3% of all MGM lines are on the half point (e.g., 8.5 instead of 8). This is so the sportsbook can avoid pushes (ties), where they have to give everybody's money back.

More evidence of the folly of crowds

First, some betting jargon, if you're new here. Teams that are popular with bettors are known as public teams. The side of a particular bet that gets more action is known as the public side. If that side of the bet won, people say the public won. Betting the less popular side is known as fading the public. When the less popular side wins against the spread, people will say Vegas won or sharps won (a sharp is someone who is good at betting, as opposed to a square, who is bad at betting.)

Looking at the stake/wager data, we can find out which team was more popular to bet on for each game. How do those public teams do collectively? Are the public any better or worse at making picks than just flipping a coin?

Due to well-earned cynicism, I was expecting that the public would be a bit worse than a coin flip. And they are. The public went 2381-2459 (49.19%) against the spread over the past 4 years.

The public greatly prefer betting on the away team. 2742/4840 (56.7%) of public bets are on the away team. The public also greatly prefer favorites. 2732/4840 (56.4%) of bets are on them.

Here's how the bets break down:

| | favorite | dog |
| --- | --- | --- |
| AWAY | 1215 | 1527 |
| HOME | 1517 | 581 |

This crosstab is a little misleading. The home team is usually favored, so there are fewer home underdogs than road underdogs. However, there's still a discrepancy. The home team is the underdog 37% of the time, but only 27% of bets on underdogs are on the home team, and 28% of bets on the home team are on underdogs.

Of the 4 categories, the only one with a winning record for the public is away favorites -- 623-592 (51.3% win rate). The second best type is home underdogs, which went 289-292 (49.7%).

The two most popular bet types with the public are big losers. Away underdogs went 742-785 (48.6%), and home favorites went a putrid 727-790 (47.9%).

Overall, someone taking the public side of every bet over the past 4 years would have lost 324 units. If they bet $100 on every game, they would be down $32,400. Someone taking the opposite side of all these bets (fading the public) would "only" lose 160 units, or $16,000.
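The unit totals come straight from the win-loss records. A small sketch, assuming every bet risks 1.1 units to win 1 (standard -110 pricing) and using my own function name:

```python
def units_won(wins, losses, risk=1.1, win=1.0):
    """Net units from a win-loss record when each bet risks `risk` to win `win`."""
    return wins * win - losses * risk

public = units_won(2381, 2459)  # taking the public side of every game
fade = units_won(2459, 2381)    # taking the other side of every game
print(f"public: {public:+.1f} units, fade: {fade:+.1f} units")
```

Both sides lose because the vig costs more than the public's small deficit against a coin flip gives back.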

It would've been profitable betting reduced juice (risk 106 to win 100 instead of 110) to fade the public on all away underdog and home favorite picks. The problem with that strategy is that reduced juice sportsbooks actively attract smart players, so they have sharper (more accurate) lines. A retail sportsbook like BetMGM doesn't need sharp lines because they ban any players who win too much. Against sharp lines, I wouldn't expect the strategy to be profitable.

How have teams done against the spread over the past 4 years?

Over the past 4 seasons, team records against the spread appear fairly random. The only real outlier is the Oklahoma City Thunder, who have won an astounding 59.3% of their games against the spread. This is remarkable to me because they've been one of the best and most hyped teams in basketball the past couple years. They dominated the league last season, winning 68/82 (82.9%) games, and the NBA Championship. They're not exactly an under the radar team. Yet they appear to have been underestimated by both Vegas and the betting public.

| Team | Win rate against the spread |
| --- | --- |
| Oklahoma City | 0.593 |
| Toronto | 0.544 |
| Boston | 0.540 |
| Memphis | 0.529 |
| Orlando | 0.528 |
| Chicago | 0.516 |
| Dallas | 0.515 |
| Cleveland | 0.508 |
| New York | 0.504 |
| Golden State | 0.504 |
| Indiana | 0.503 |
| Houston | 0.502 |
| LA Lakers | 0.500 |
| Miami | 0.499 |
| Philadelphia | 0.498 |
| Minnesota | 0.496 |
| Detroit | 0.495 |
| Utah | 0.492 |
| Denver | 0.491 |
| Sacramento | 0.490 |
| LA Clippers | 0.489 |
| San Antonio | 0.488 |
| Charlotte | 0.487 |
| Milwaukee | 0.484 |
| Brooklyn | 0.483 |
| Portland | 0.477 |
| New Orleans | 0.476 |
| Phoenix | 0.471 |
| Atlanta | 0.442 |
| Washington | 0.439 |

While it's not impossible OKC's record against the spread is due to chance alone, a 59.3% winning percentage over 332 games seems like a failure of the market.

Who are the most common favorites?

Boston has been the most dominant, being favored in 319/361 (88.4%) of their games over the past four seasons, followed by Milwaukee, Denver and Golden State.

Detroit, Charlotte, Washington and San Antonio were the most common underdogs.

| Team | Share of games as favorite |
| --- | --- |
| Boston | 0.884 |
| Milwaukee | 0.734 |
| Denver | 0.711 |
| Golden State | 0.707 |
| Cleveland | 0.706 |
| Phoenix | 0.691 |
| Miami | 0.608 |
| Minnesota | 0.595 |
| LA Clippers | 0.588 |
| New York | 0.572 |
| Philadelphia | 0.568 |
| Memphis | 0.560 |
| Oklahoma City | 0.557 |
| Dallas | 0.542 |
| Sacramento | 0.535 |
| LA Lakers | 0.509 |
| Atlanta | 0.498 |
| Indiana | 0.456 |
| Chicago | 0.426 |
| Toronto | 0.414 |
| Brooklyn | 0.411 |
| Utah | 0.406 |
| New Orleans | 0.385 |
| Orlando | 0.358 |
| Houston | 0.309 |
| Portland | 0.245 |
| San Antonio | 0.215 |
| Washington | 0.213 |
| Charlotte | 0.194 |
| Detroit | 0.188 |

Who are the most common public teams?

We saw the public likes to bet on the away team, and the favorite. Do they have team-specific tendencies?

They certainly do. The LA Lakers and Golden State Warriors are the most popular, being picked by the public in 72% of their games. Detroit and Orlando are the least popular, only getting the majority of bets in 31% of their games.

| Team | Share of games as the public side |
| --- | --- |
| LA Lakers | 0.716 |
| Golden State | 0.716 |
| Milwaukee | 0.694 |
| Phoenix | 0.607 |
| Denver | 0.603 |
| Dallas | 0.577 |
| Boston | 0.557 |
| Memphis | 0.551 |
| Chicago | 0.545 |
| Indiana | 0.541 |
| Philadelphia | 0.538 |
| Cleveland | 0.520 |
| Miami | 0.519 |
| Utah | 0.518 |
| Oklahoma City | 0.518 |
| Minnesota | 0.504 |
| LA Clippers | 0.502 |
| Brooklyn | 0.497 |
| Atlanta | 0.495 |
| New York | 0.484 |
| San Antonio | 0.436 |
| Washington | 0.425 |
| Sacramento | 0.417 |
| Houston | 0.401 |
| Toronto | 0.382 |
| Charlotte | 0.359 |
| Portland | 0.341 |
| New Orleans | 0.322 |
| Detroit | 0.314 |
| Orlando | 0.311 |

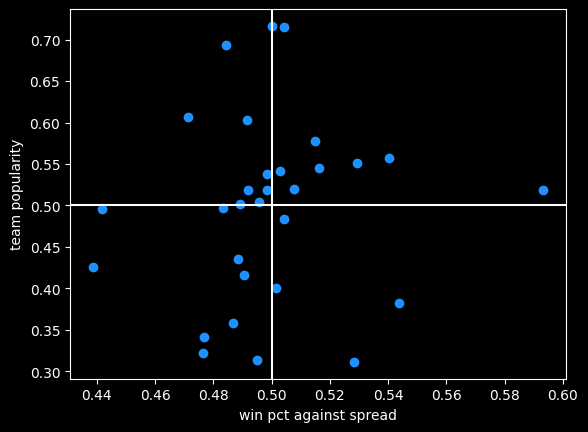

This is a very similar ranking to what I saw in the previous data -- the public don't appear to be picking bets for purely rational reasons. They like to bet on popular teams, good teams, and teams with popular players. They don't like to bet on unpopular or bad teams, even though both sides have the same chance of winning against the spread. It makes sense as an aesthetic choice, but not as a mathematical choice.

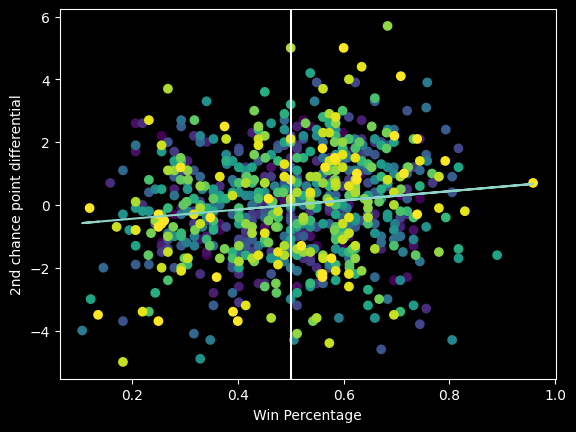

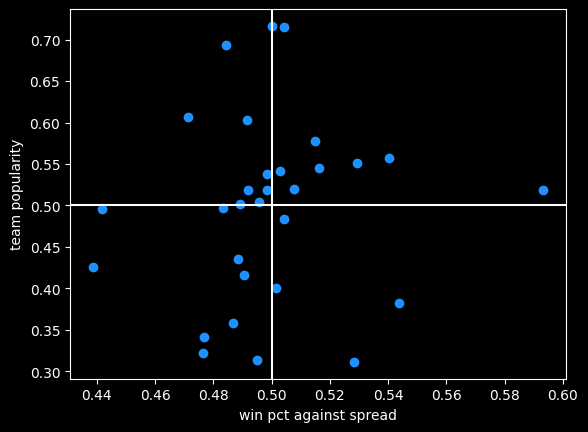

There is a bit of correlation (r = .08) between team popularity and their record against the spread. Bettors do slightly prefer to bet on teams that do well against the spread:

Oklahoma City is that lonely dot far off to the right, far better than every other team but only slightly more popular than average.

Money lines and the favorite/longshot bias

So far I've only been looking at bets against the spread. This new dataset also includes info on money line bets.

In some ways, money line bets are easier to understand -- just bet on the team you think will win. If you like the favorite, you will win less than you risk; if you like the underdog, you win more than you risk. If it's, say, Oklahoma City versus Dallas, the two sides might be OKC -320 (risk $320 to win $100, implying about a 76% chance of winning) and DAL +260 (risk $100 to win $260).

With spread bets, both sides have an equal chance of winning, and are usually priced the same. But with money lines, the gambler doesn't know the true odds, so they don't know the vig they're paying.
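To make the OKC/Dallas example concrete, here's a sketch of the usual conversion from American odds to the break-even ("implied") win probability. The function name is mine. The two implied probabilities add up to more than 100%, and that excess reflects the vig. It doesn't say how the vig is split between the two sides, which is the part the gambler can't see.

```python
def implied_probability(american_odds):
    """Break-even win probability for a bet at the given American odds."""
    if american_odds < 0:
        risk = -american_odds  # e.g. -320 means risk 320 to win 100
        return risk / (risk + 100)
    return 100 / (american_odds + 100)  # e.g. +260 means risk 100 to win 260

okc = implied_probability(-320)
dal = implied_probability(260)
print(f"OKC {okc:.1%}, DAL {dal:.1%}, total {okc + dal:.1%}")
```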

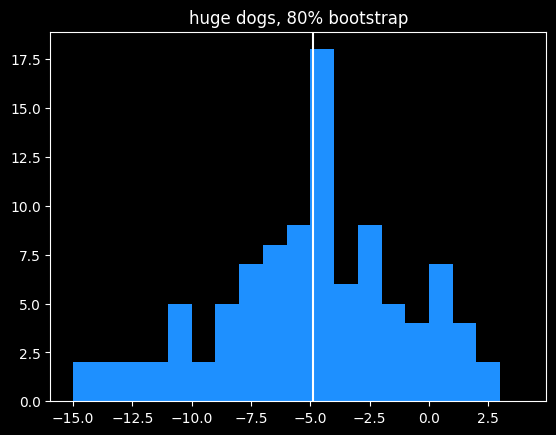

There is data to suggest that underdogs pay more in vig, and are therefore more profitable for the sportsbook. This is called the favorite-longshot bias -- conventional betting wisdom is the expected value of taking an underdog money line bet (especially a big underdog) is lower than taking the favorite. People who bet the underdog are in essence paying a premium to have a more thrilling outcome if they do win.

While it's a documented phenomenon in horse racing, there's some debate as to whether it exists in general, so I analyzed the 4,634 games with money line betting data.

If somebody always bet the favorite on every NBA game, or the underdog, what would be their rate of return? Is it better or worse than the -4.5% rate on spread bets?

I did find evidence of the favorite-longshot bias in the NBA money lines. When risking a constant amount of money on each bet, underdogs had a rate of return of -5.47% versus -4.09% for favorites. Favorites between -200 and -400 offer the best rate of return of any type of money line bet, at -2.97%. Every single type of underdog bet does worse than the -4.5% of a typical spread bet.

| label | start | end | num games | return % |
| --- | --- | --- | --- | --- |
| all faves | -9999 | -1 | 4839 | -4.09% |
| mild faves | -200 | -1 | 2174 | -4.19% |
| heavy faves | -400 | -200 | 1612 | -2.97% |
| huge faves | -9999 | -400 | 1053 | -5.59% |
| all dogs | 1 | 9999 | 4634 | -5.47% |
| mild dogs | 1 | 200 | 2440 | -5.60% |
| heavy dogs | 200 | 400 | 1430 | -5.69% |
| huge dogs | 400 | 9999 | 764 | -4.65% |

The number of dogs and favorites aren't equal because some games have money lines where both sides are negative, say -110, like a traditional bet with a spread of zero. There's also a big asymmetry when the odds are long. For instance the favorite might be -800 and the underdog is +561. That doesn't represent a huge vig, it's just a quirk of how American-style odds work (covered in the book).
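One way to check that the -800/+561 pair isn't hiding a huge vig is to add up the two implied probabilities and compare the excess over 100% with that of a standard -110/-110 line. This is a sketch with my own function names, and the overround is only a rough stand-in for the vig.

```python
def implied_probability(american_odds):
    """Break-even win probability for a bet at the given American odds."""
    if american_odds < 0:
        risk = -american_odds
        return risk / (risk + 100)
    return 100 / (american_odds + 100)

def overround(odds_a, odds_b):
    """How far the two implied probabilities add up past 100%."""
    return implied_probability(odds_a) + implied_probability(odds_b) - 1

long_odds = overround(-800, 561)
even_game = overround(-110, -110)
print(f"-800/+561: {long_odds:.1%}   -110/-110: {even_game:.1%}")
```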

Caveats and bootstraps

The table above isn't telling the whole story. I'm not conveying a sense of the volatility in the rate of return. Huge underdogs have a bigger payout when they do win, so the outcomes are going to be a lot more variable than betting the favorites.

-4.65% is the best estimate of the rate of return for huge underdogs, but maybe we just got lucky, or unlucky. A couple of longshot bets winning or losing could drastically change the overall return rate.

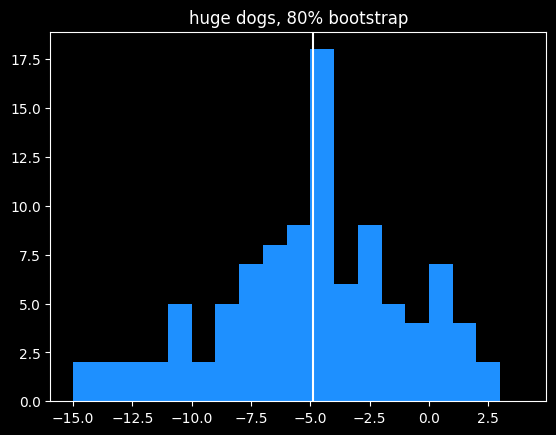

To test volatility, I used bootstrapping, previously covered in one in e. I repeatedly randomly sampled 80% (611/764) of the huge underdog bets and calculated the rate of return on that set. Looking at the range of outcomes helps illustrate the variability of these bets.

Doing that 100 times leads to a pretty wide spread of rates of return:

Although the mean value is close to the overall mean, 15% of bootstrapped samples actually had a positive rate of return. This is similar to what we saw in cool parlay, bro, where due to variance, even gamblers with no advantage over the house had a small chance of making money over a long period of time off of parlays. I described that situation "like a lottery where you have a 12% chance of winning $3,617, but an 88% chance of losing $10,221." This is a similar proposition.
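A bare-bones sketch of that resampling procedure is below. It is not the notebook's code. I'm assuming each subset is drawn without replacement and every bet risks 1 unit. The toy bets are made up purely so the example runs and are not taken from the dataset.

```python
import random

def rate_of_return(bets):
    """Return per unit risked; bets are (american_odds, won) pairs, 1 unit each."""
    profit = 0.0
    for odds, won in bets:
        if won:
            profit += odds / 100 if odds > 0 else 100 / -odds
        else:
            profit -= 1.0
    return profit / len(bets)

def subsample_returns(bets, frac=0.8, n_iter=100, seed=0):
    """Rate of return on repeated random subsets holding `frac` of the bets."""
    rng = random.Random(seed)
    k = round(len(bets) * frac)
    return [rate_of_return(rng.sample(bets, k)) for _ in range(n_iter)]

# Made-up placeholder bets, NOT real results: one +500 winner per six bets.
toy_bets = ([(500, True)] + [(500, False)] * 5) * 100
returns = subsample_returns(toy_bets)
share_positive = sum(r > 0 for r in returns) / len(returns)
print(f"min {min(returns):+.1%}, max {max(returns):+.1%}, positive {share_positive:.0%}")
```

Swapping `toy_bets` for the real list of huge underdog bets would be the actual analysis. The toy list is built to break exactly even overall, so any spread in the output is pure sampling noise.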

Bridgejumper parlays and p-hacking

We'd need a lot more data to get a crisp estimate of the rate of return on huge underdogs, but we can have more confidence in the estimated -5.59% rate for huge favorites. Huge favorites being disadvantaged kind of contradicts the favorite-longshot bias theory, but it doesn't surprise me at all due to a strange phenomenon I've seen over and over again online.

It's a type of bet sharps might call "bridgejumper parlays" and squares on the internet unironically call "free money parlays" -- a parlay of a whole bunch of heavy favorite money lines together.

The gambler starts with some heavy favorite they like and keeps adding more heavy favorites to the parlay till they get plus odds (they can win more than they risk), ending up with 5-6 heavy favorites on the same parlay. They will pick legs of the parlay from different sports, if necessary. There usually aren't enough heavy favorites in the NBA on a particular night to get to the magic > +100 payout.

An example you might see posted on reddit or twitter would be a 6 leg parlay with a payout of +105 like:

- Celtics -600

- Nuggets -900

- OKC -1000

- Lakers -600

- Duke -600

- Some UFC Guy, because they needed a sixth bet -700

I've talked to a couple people who take bridgejumper parlays, and they genuinely seem to think they've found some kind of glitch in the matrix -- all of the legs of the bet have a high chance of winning, therefore the bet is a sure thing.

A sensible person would know that sportsbooks aren't in the business of offering free money so maybe they're missing something. A humble person would assume they probably hadn't discovered some clever trick the sportsbooks don't know about. But most people who get into betting aren't sensible or humble (they think they can see the future, after all), so for a certain class of bettor, this type of wager just feels like a sure thing.

In parlay form, the fallacy seems obvious to me. Just because the individual risk on each leg of the parlay is small, that doesn't mean the combined risk is.

There's a more sophisticated version of this fallacy in statistics called p-hacking. As always, there's an xkcd about it. A particular experiment might have a 5% chance of giving a false result. 5% is a pretty small number. But if you do 13 of those experiments, there's about a 50% chance that at least one of them will give a false result. Even people who are good at math and science probably don't have a good sense of what \(.95^{13}\) equals. It's sort of like a 13 leg parlay of money line bets at -1900.
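The arithmetic is easy to check. This sketch assumes the experiments (or parlay legs) are independent, and the function name is just for illustration:

```python
def chance_of_any_false_result(n_experiments, false_rate=0.05):
    """Chance that at least one of n independent experiments gives a false result."""
    return 1 - (1 - false_rate) ** n_experiments

for n in (1, 6, 13):
    print(f"{n:2d} experiments: {chance_of_any_false_result(n):.1%}")

# A -1900 money line implies a 1900 / (1900 + 100) = 95% chance of winning,
# so hitting all 13 legs of that parlay has the same odds as 13 clean experiments.
all_legs_win = (1900 / 2000) ** 13
print(f"13 leg parlay at -1900 hits: {all_legs_win:.1%}")
```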

Next time: more on point totals, money lines, and human nature.

Oct 26, 2025

Song: Pigeonhed, "Glory Bound" (Dave Ruffy Remix)

Code: https://github.com/csdurfee/scrape_yahoo_odds/

A little crowd wisdom

Last week, we saw that for NBA spread bets, the more popular side wins 49.2% of the time. This matches what I've shown before -- the public is generally worse than a coin flip when betting on NBA basketball.

This week, I have some good news. There are some types of bets on the NBA where the public do a little better than expected. Not enough to make money, but better than picking bets by flipping a coin.

Money line bets and rationality

Last time, I looked at money line bets and showed that there is a bias against slight to heavy underdogs, and a possible bias against very heavy favorites. There's a lot I didn't get to, though. What kind of money line bets do the public prefer?

The public takes the home team 63% of the time, which is a little high given the home team wins 55% of games. The public also takes the money line favorite in 96% (4656/4839) of NBA games.

Is that rational or not? We know that favorites are a slightly better deal than underdogs on the money line, so in one sense it is. If someone offered you a free money line bet on the NBA, you should probably pick the favorite. The expected value is less bad than the underdog, so it's a better choice in the long run, and your chances of making some money on the bet are much higher, so it's perhaps a better choice as a single bet as well.

A gambler taking half favorites and half underdogs has a chance at winning 100% of their bets, but someone taking a highly imbalanced number of favorites (or underdogs) will have a whole lot of guaranteed losses. The gambler might correctly pick every single favorite, but close to half of their bets on favorites are still going to be losses.

Getting pigeonholed

Money lines are all at different odds, which complicates things. For this example, let's say the gambler is taking spread bets, with both sides having a roughly equal chance of winning. There are 1,230 games in the season. We'll assume the favorite is a slightly better deal and wins 51% of the time, and that the gambler takes the favorite 96% of the time. Over 1,230 games, the favorites go 627-603. The gambler takes 1,181 favorites and 49 underdogs on the season.

The best they can possibly do on favorites is 627/1181 (53%), assuming they somehow manage to correctly bet on every single favorite that won that season. The gambler captured all of the favorites that won, so the rest of the games left over are underdog winners, meaning they'd go a perfect 49/49 in underdog games. Overall, the best they can do is (627+49)/1230, which is 55.0%. Since the break-even point is 52.4%, most of the profits are coming from the 49 underdog games.

By taking so many favorites, the pigeonhole principle means the gambler has to be perfect just to hit a 55.0% win rate. As I showed in Do you wanna win?, 55.0% is good enough to have a positive expected value on bets, but it's low enough that losing money over 1,230 bets is very possible, even with proper bankroll management. If hitting that number requires you to be perfect, it seems like a bad plan.
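The pigeonhole arithmetic above can be sketched in a few lines of Python. This is only an illustration of the counting argument, not code from the book repo; the function name `best_case_record` and its parameters are made up for this example.

```python
def best_case_record(games, favorite_win_rate, favorite_pick_rate):
    """Best possible record for a bettor who takes favorites at a fixed rate.

    Returns (favorite bets won, underdog bets won, overall win rate).
    """
    favorite_wins = round(games * favorite_win_rate)
    underdog_wins = games - favorite_wins
    favorite_picks = round(games * favorite_pick_rate)
    underdog_picks = games - favorite_picks

    # You can't win more favorite bets than there were winning favorites,
    # and you can't win more bets than you placed. Same for underdogs.
    best_favorites = min(favorite_picks, favorite_wins)
    best_underdogs = min(underdog_picks, underdog_wins)
    return best_favorites, best_underdogs, (best_favorites + best_underdogs) / games


# The scenario from the text: 1230 games, favorites win 51%,
# and the gambler takes the favorite 96% of the time.
fav, dog, rate = best_case_record(1230, 0.51, 0.96)
print(f"favorites: {fav}, underdogs: {dog}, best case win rate: {rate:.1%}")
```

Lowering `favorite_pick_rate` toward the actual favorite win rate raises the ceiling, which is the whole point: the more lopsided the picks, the more losses are locked in before a single game is played.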

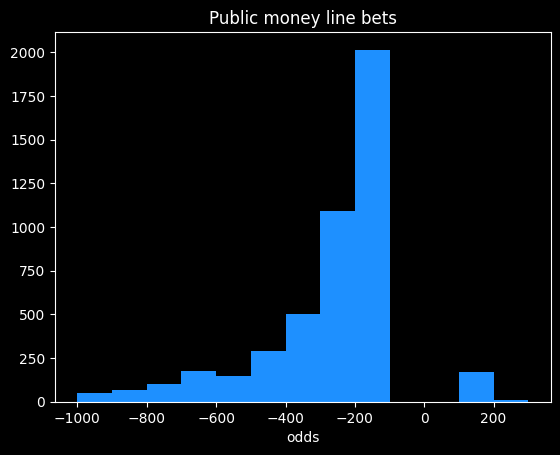

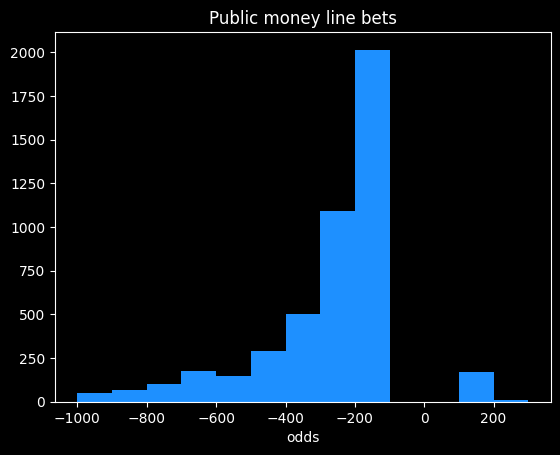

Here are the frequencies of odds taken by the public on the money line. There's an awkward gap in the middle. In the American way of writing odds, favorites are always -100 or smaller, and underdogs are always +100 or bigger.

The public mostly takes bets in the -100 to -300 range, which is in the range where money line bets have been a slightly better value than spread bets over the past four seasons, so good for them. The biggest underdog bet the public took in nearly 5,000 bets was at +400 (implying the underdog had a 20% chance of winning.)

The public achieved a -2.87% rate of return on money line bets. That's bad compared to the 0% rate of return offered by not betting, but it's better than the -4.5% on spread bets, and way better than the -20% on some types of parlay bets. Someone fading, or taking the opposite bets to the public, would achieve a -6.4% rate of return.

On the rare occasions the public does take the underdog, they make a profit. On those 183 bets, the public notched a +2.43% rate of return. Bets on underdogs will have a high amount of variance, so there could be some luck involved.

Point totals

In addition to who will win the game, gamblers can wager on how many total points will be scored. For instance, if the final score is 120-115, the point total would be 235. If the line was 230, the OVER side would have won, and the UNDER lost.

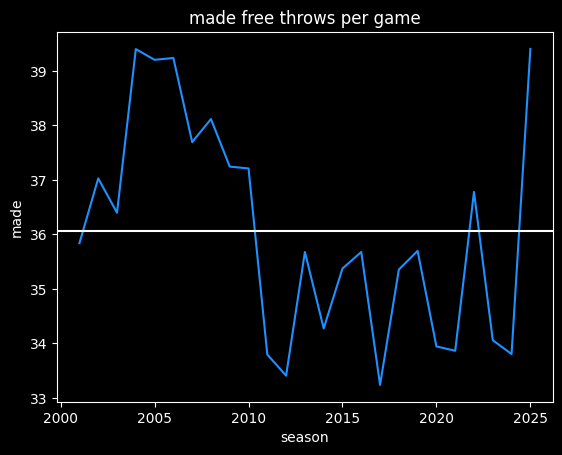

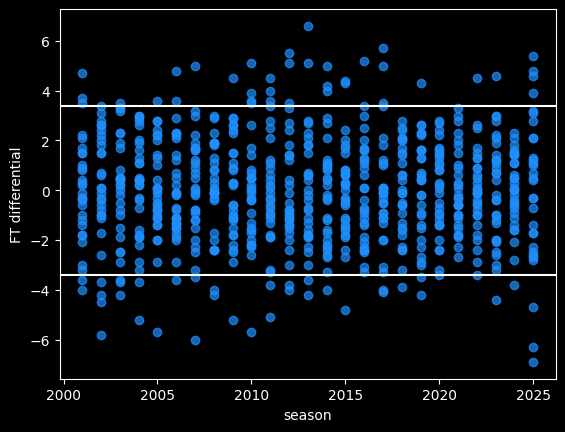

The point totals are pretty balanced. The over wins 50.7% (2672/5273) of the time. However, the public takes the over 96.3% (4660/4839) of the time.

The public did a little better than a coin flip, winning 51% of over bets, and 54.5% of the 178 under bets.

I was curious whether the percent of bets on one side was correlated with higher winning percentage. If 99% of the public took the over, is that a safer bet than if only 51% of the public took it? I've broken down the public's bets on the over by quartiles:

| start % | end % | num games | win % |
|--------:|------:|----------:|------:|
| 50 | 73.9 | 1165 | 50.5% |
| 73.9 | 82.4 | 1164 | 51.4% |
| 82.4 | 88.4 | 1163 | 50.9% |
| 88.4 | 100 | 1168 | 51.5% |

Each quartile has a little over 1,000 games. For 1,000 observations, a 95% confidence interval is roughly +/- \(\frac{1}{\sqrt{1000}} \approx \frac{1}{31.6} \approx 3\%\), which is much bigger than the difference between 50.5% and 51.5%. So the difference isn't significant.
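As a quick check on that rule of thumb, here is a small sketch using the usual normal approximation for a proportion. The function name `margin_of_error` is just for illustration, and the default of p = 0.5 is an assumption that fits bets that are close to coin flips.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Half-width of an approximate 95% confidence interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)


# Quartile sizes from the table above
for games in (1165, 1164, 1163, 1168):
    print(f"{games} games: +/- {margin_of_error(games):.1%}")

# The spread between the best and worst quartile is one percentage point
spread = 0.515 - 0.505
print("spread smaller than margin of error:", spread < margin_of_error(1165))
```

With z = 1.96 and p = 0.5, the formula works out to 0.98 divided by the square root of n, which is where the 1 over the square root of n shortcut comes from.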

Are sportsbooks leaving money on the table?

It's odd to me that the over would win more than 50% of the time when that's the side that usually has the most money on it. That's not a huge imbalance, but the sportsbooks would be making more money if they increased the point totals a bit so the over loses 51% of the time instead.

Gamblers betting on point totals are probably a little more savvy than ones betting the lines. It's kind of an odd thing to bet on. Doesn't matter who won, doesn't matter whether it was a good game or not. All bets are math problems, but point totals are more obviously a math problem than money lines or spreads.

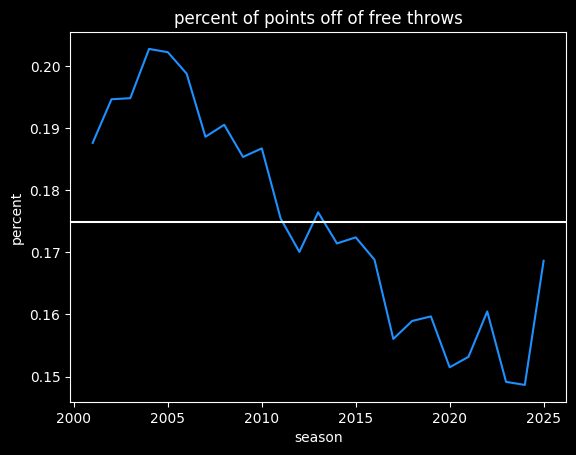

Do point totals tell us anything about the evolution of the NBA?

It's possible sportsbooks haven't kept up with the evolution of the game. NBA point totals have gotten much higher over the past decade due to faster pace, better shooting and more 3 pointers.

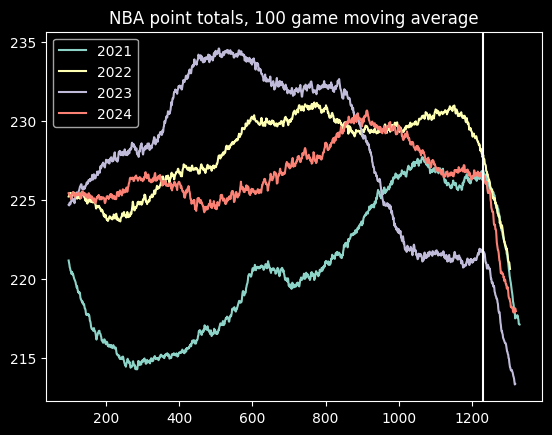

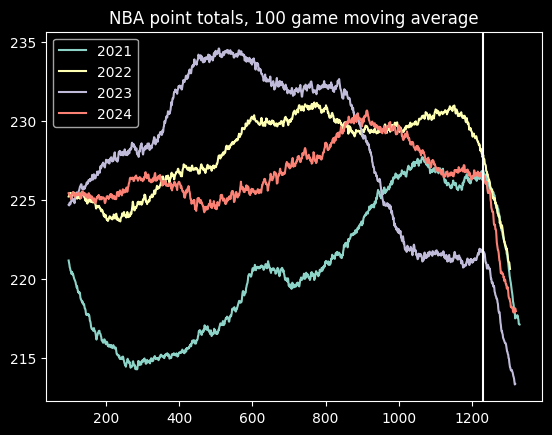

Here is a graph of moving averages of the point totals for the past four seasons:

Point totals go down at the end of every year. That's because playoff games tend to be more tightly contested than regular season games. The vertical line is where the playoffs start.

There appears to be an increase in average point totals during the 2021 season, but it's stayed fairly consistent since then, with one notable exception. There's a big dip in point totals during the 2023 season, around game 800. This is almost certainly due to a directive from the NBA telling officials to call fewer fouls. Fans (and presumably gamblers) noticed right away, but the NBA only admitted the change well after the fact. Luckily, nothing has happened since then to further undermine trust in the NBA!

Nov 05, 2025

Song: Geraldo Pino, "Heavy Heavy Heavy"

Code: https://github.com/csdurfee/scrape_yahoo_odds/. See the push_charts.ipynb notebook.

NBA Push Charts

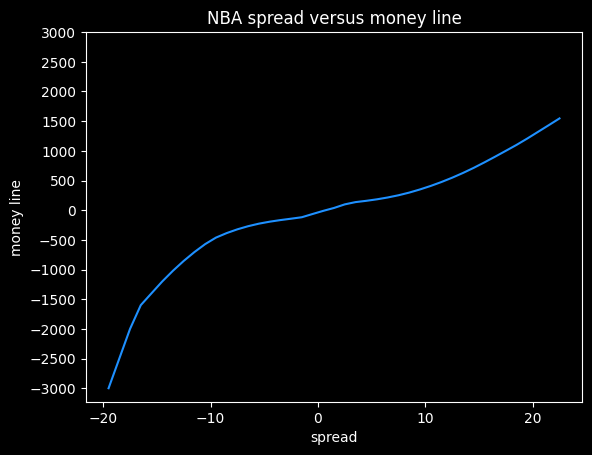

In the past couple of posts, I've looked at NBA betting data from BetMGM. There are multiple types of bets available on every game. A push chart maps the fair price of a spread bet to the equivalent money line bet. How often does a team that is favored by 3 points win the game outright? It's going to be less often than a team favored by 10 points. The money line should reflect those odds of winning.

You can find a lot of push charts online, but they tend to be based on older NBA data. With more variance due to a lot of 3 point shots, and more possessions per game, I wouldn't expect them to still be accurate.

Push charts are useful for assessing which of two different wagers is the better value. Here's an example of a game where different sportsbooks have different lines for the same game:

Say you want to bet on the Warriors. They have some players out tonight (Nov 5, 2025) so they're the underdogs, but they are also playing the dysfunctional Kangz, so it might be a good value bet. You could take the Warriors at either +3.5 -114, +3 -110, or +2.5 -105. Which bet has the highest expected value? If the Warriors lose by 3, the +3.5 bet would win, but the +3 bet would push (the bet is refunded), and the +2.5 bet would lose. A push chart can give a sense of how valuable the half points from +2.5 to +3, and +3 to +3.5, are. Is the probability of the Warriors losing by exactly 3 -- the value of getting a push -- worth going from -105 to -110?

[edit: the Warriors, without their 3 best players, led for most of the game before eventually losing by 5 after Russell Westbrook had one of his best games in years. So the line was only off by 2 points. The lines are often uncannily close, even when a bunch of weird stuff happens. Sometimes it all kinda cancels out.]
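To make the half-point question concrete, here is a sketch of how the three Warriors lines could be compared once you have an estimate of how often the game lands on exactly 3. The two probabilities below are HYPOTHETICAL placeholders, not values from the data; estimating them is the job of a push chart. The function names are made up for this example.

```python
def payout_per_unit(american_odds):
    """Profit on a winning 1-unit bet at the given American odds."""
    if american_odds < 0:
        return 100 / -american_odds
    return american_odds / 100


def expected_value(american_odds, p_win, p_push=0.0):
    """Expected profit per unit risked. A push refunds the stake (profit 0)."""
    p_lose = 1 - p_win - p_push
    return p_win * payout_per_unit(american_odds) - p_lose


# HYPOTHETICAL probabilities, chosen only to show the mechanics:
P_COVER_2_5 = 0.50  # Warriors win outright, or lose by 1 or 2
P_LOSE_BY_3 = 0.04  # Warriors lose by exactly 3

bets = {
    "+2.5 -105": expected_value(-105, P_COVER_2_5),
    "+3 -110": expected_value(-110, P_COVER_2_5, p_push=P_LOSE_BY_3),
    "+3.5 -114": expected_value(-114, P_COVER_2_5 + P_LOSE_BY_3),
}
for name, ev in bets.items():
    print(f"{name}: {ev:+.4f} units per unit risked")
```

The ranking depends entirely on the assumed chance of a 3 point loss. With `P_LOSE_BY_3` at 0.04 the extra half points are worth paying for, but drop it to 0.01 and the order reverses, with the cheaper +2.5 line coming out ahead.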

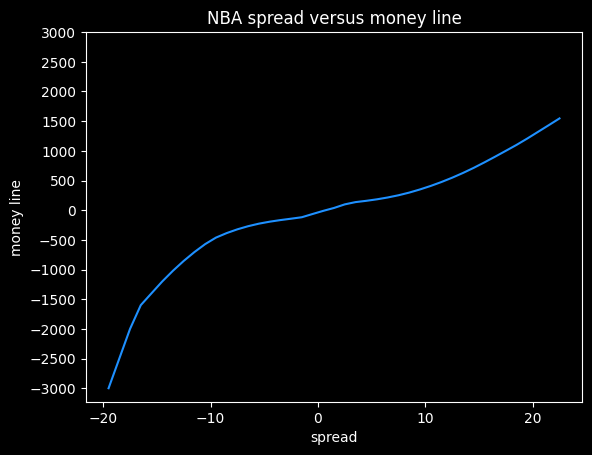

I'm going to do a push chart two different ways, then combine them. For almost every game over the last 4 years, we have the money line and the spread, so we can match them up directly. These will have the vig baked in. And as we saw previously, the money line for favorites has a slightly better expected value than the average bet on the spread. So it's going to end up a little biased.

We can also calculate a push chart without any vig by looking at winning percentage for every spread -- what percent of the time does a +3.5 point underdog actually win the game? It will need some smoothing, since the data will be noisy.

The retail NBA push chart

I matched up the spread and the money line for both the home and the away team on each game, then took the median of those values. Spreads in the range of -0.5 to +0.5 are very rare, as are spreads over -13.5/+13.5, so I've omitted those.

|spread |money line|
|------:|---------:|
| -13.5 | -1000 |
| -12.5 | -750 |
| -11.5 | -650 |
| -10.5 | -550 |
| -9.5 | -450 |
| -8.5 | -375 |
| -7.5 | -300 |
| -6.5 | -250 |
| -5.5 | -225 |
| -4.5 | -190 |
| -3.5 | -160 |
| -2.5 | -140 |
| -1.5 | -120 |
| 1.5 | 100 |
| 2.5 | 115 |
| 3.5 | 135 |
| 4.5 | 155 |
| 5.5 | 180 |
| 6.5 | 200 |
| 7.5 | 240 |
| 8.5 | 290 |
| 9.5 | 340 |
| 10.5 | 400 |
| 11.5 | 475 |
| 12.5 | 525 |
| 13.5 | 625 |

This gives us how BetMGM prices the value of individual points on the spread (with the vig figured in). This is sort of their official price list.

American style odds are symmetrical if there is no vig involved. A -400 bet implies a 4/5 chance of winning, and a +400 bet implies a 1/5 chance of winning. If the -400 bet wins 4/5 of the time and loses 1/5 of the time, the gambler breaks even, and vice versa. So a no-vig money line would be +400/-400.

The odds offered by the sportsbooks are asymmetrical, because they want to make money. We see that +13.5 on the spread maps to a +625 money line, but -13.5 maps to -1000. A +625 bet should win 100/(100+625), or about 14% of the time. A -1000 bet should win 1000/1100, or 91% of the time. Adding those together, we get 91% + 14% = 105%. That extra 5% is called the overround, and is the bookmaker's guaranteed profit, assuming they have equal wagers on both sides.
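The overround calculation is easy to reproduce. Here is a minimal sketch; the function names are made up for illustration and aren't taken from the scrape_yahoo_odds repo.

```python
def implied_probability(american_odds):
    """Break-even win probability for a bet at the given American odds."""
    if american_odds < 0:
        return -american_odds / (-american_odds + 100)
    return 100 / (american_odds + 100)


def overround(line_a, line_b):
    """How far the two sides' implied probabilities add up past 100%."""
    return implied_probability(line_a) + implied_probability(line_b) - 1


# The +/-13.5 rows from the retail push chart
print(f"+625 implies {implied_probability(625):.1%}")
print(f"-1000 implies {implied_probability(-1000):.1%}")
print(f"overround: {overround(-1000, 625):.1%}")

# A no-vig pair like -400/+400 has no overround
print(f"no-vig overround: {overround(-400, 400):.1%}")
```

Running `overround` over every row of the retail chart is one way to see how the sportsbook's cut changes as the spread gets bigger.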

Here's what that data looks like as a graph, with some smoothing added.

I think this shows the bias against heavy favorites that we saw previously. Note how the line gets much steeper moving from -10 to -20 on the spread, versus moving from +10 to +20.

At -20.5, this graph gives a payout of -5000, but at +20.5, it gives a payout of +1320. The fair payout is somewhere in between those two numbers.

If the fair payout is (-5000/+5000), then the underdog is getting way less of a payout (in essence, paying more in vig) than they should. If the fair payout is closer to (-1320/+1320), then the favorite is paying more in vig than they should. So the asymmetry gives a clue that sportsbooks aren't splitting the vig down the middle, but it doesn't tell us the fair price.

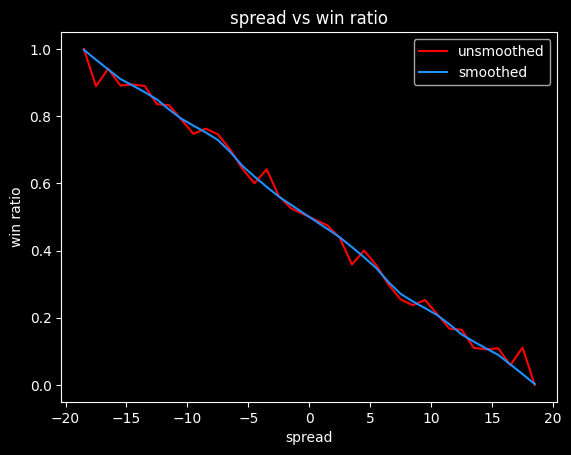

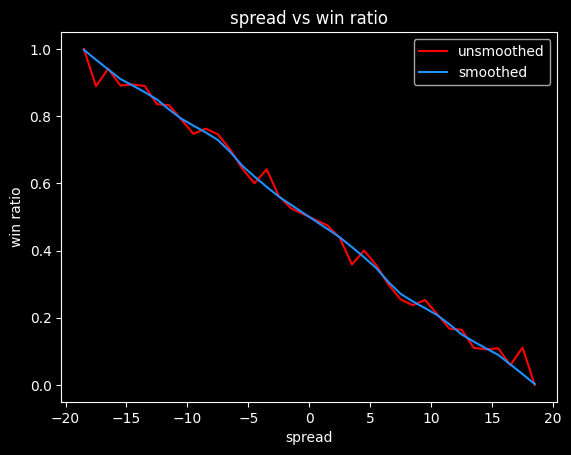

Push charts based on win frequencies

We can estimate a fair price by going through all the games and seeing how often a +3.5 underdog wins outright, then converting that percentage to a fair money line. The problem here is that the results will be very noisy. We'd need hundreds or thousands of games at each spread value, and we only have 4,500 or so games overall. So it's gonna be chunky. We'll have to do some smoothing on it. (Foreshadowing alert: it will lead to weird results later on.)

Here are the win ratios by spread, combining both home and away teams:

| spread| win ratio |
|------:|----------:|
| -13.5 | 0.889764 |
| -12.5 | 0.835616 |
| -11.5 | 0.832487 |
| -10.5 | 0.789474 |
| -9.5 | 0.747212 |
| -8.5 | 0.762763 |
| -7.5 | 0.745763 |
| -6.5 | 0.701299 |
| -5.5 | 0.643892 |
| -4.5 | 0.6 |
| -3.5 | 0.641682 |
| -2.5 | 0.562393 |
| -1.5 | 0.525535 |
| 1.5 | 0.474465 |
| 2.5 | 0.437607 |
| 3.5 | 0.358318 |
| 4.5 | 0.4 |
| 5.5 | 0.356108 |
| 6.5 | 0.298701 |
| 7.5 | 0.254237 |
| 8.5 | 0.237237 |
| 9.5 | 0.252788 |
| 10.5 | 0.210526 |
| 11.5 | 0.167513 |
| 12.5 | 0.164384 |
| 13.5 | 0.110236 |

A team with a -1.5 point spread wins 52.6% of the time, which is very close to the break-even point on standard -110 bets of 52.4%. So a gambler needs to be at least 1.5 points better than Vegas at setting the lines to expect to make money.

These odds have to be symmetrical. If a team favored by -4.5 wins 60% of the time, then a +4.5 point underdog has to win 40% of the time.

Unfortunately, this data is probably too chunky to give good results. -4.5 has a lower win rate than -3.5, which doesn't make any sense. The more negative the spread, the higher the win rate should be. Here's what it looks like with smoothing:

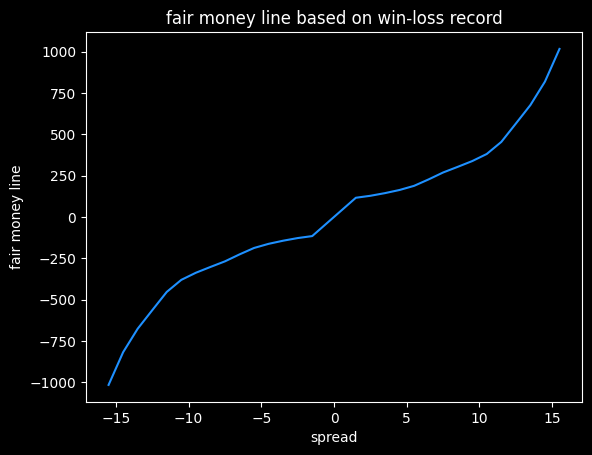

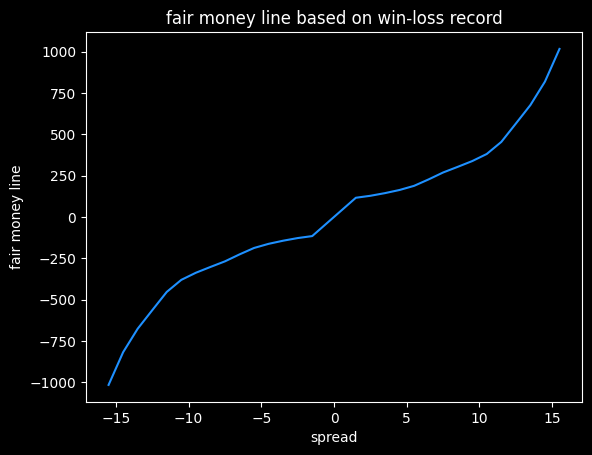

We can convert these winning percentages to fair (no vig) money lines:

Converting to money lines doesn't break the symmetry. A -4.5 favorite gets a money line of -163, and a 4.5 point underdog gets a money line of +163.

This is just a first attempt. Different ways of smoothing the actual win rates will lead to different results.

|spread |fair ML |
|------:|-------:|
| -13.5 | -677 |
| -12.5 | -565 |
| -11.5 | -454 |
| -10.5 | -381 |
| -9.5 | -338 |
| -8.5 | -303 |
| -7.5 | -269 |
| -6.5 | -227 |
| -5.5 | -188 |
| -4.5 | -163 |
| -3.5 | -144 |
| -2.5 | -128 |
| -1.5 | -116 |
| 1.5 | 116 |
| 2.5 | 128 |
| 3.5 | 144 |
| 4.5 | 163 |
| 5.5 | 188 |
| 6.5 | 227 |
| 7.5 | 269 |
| 8.5 | 303 |
| 9.5 | 338 |
| 10.5 | 381 |
| 11.5 | 454 |
| 12.5 | 565 |
| 13.5 | 677 |
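For reference, the conversion from a win probability to a no-vig money line can be sketched like this. It's an illustrative helper (`fair_money_line` is a made-up name) and doesn't include the smoothing step, so feeding it the raw win ratios won't reproduce the table exactly.

```python
def fair_money_line(win_probability):
    """Convert a win probability to a no-vig American money line."""
    if not 0 < win_probability < 1:
        raise ValueError("win_probability must be strictly between 0 and 1")
    if win_probability >= 0.5:
        # Favorite: how much you'd risk to win 100
        return -round(100 * win_probability / (1 - win_probability))
    # Underdog: how much you'd win by risking 100
    return round(100 * (1 - win_probability) / win_probability)


# Symmetric probabilities give symmetric lines
for p in (0.62, 0.38, 0.8, 0.2):
    print(f"{p:.0%} -> {fair_money_line(p):+d}")
```

A favorite that wins 62% of the time comes out at -163 and the matching 38% underdog at +163, which is the symmetry described above.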

Crossing the streams, and an abrupt ending

What happens if we look at the difference between the no-vig moneylines, and what the sportsbook actually charges? Will it show us the favorite-longshot bias?

It turns out, it doesn't. The win frequency data is too noisy. I spent a lot of time spinning my wheels on it, before realizing I didn't have enough data. I will come back to the subject, though, because failure is more educational than success.

Nov 18, 2025

Song: "Round 6", by Prince Jammy

A few interesting statistics from the first dozen games of the 2025-26 NBA season.

I'm generally talking about stats per 100 possessions, rather than raw stats (unless otherwise noted).

The absurd OKC Thunder

The OKC Thunder have been a godless basketball killing machine this year. Almost every win is a blowout, despite their second best player being injured. They look like they don't even have to try all that hard, and they're winning by an average of 16 points.

To me, their secret sauce is that they make it nearly impossible to score against them. There are no easy buckets. Here are some good ways to get easy points in the NBA:

- make lots of 3's

- get lots of free throws (and make them)

- take a lot of shots close to the basket

- get points off of turnovers

- get out on the fast break

- get second chance points

The Thunder are middle of the pack at stopping the first two. They're only 15th in 3 pointers made against them per game, and 12th in free throws given up.

They're ridiculously elite at everything else that makes scoring hard. The Thunder are first in the league at:

- Defensive Rating

- Defensive Rebounding

- Steals

- Fewest opponent fast break points

- Fewest opponent points in the paint

- Fewest opponent points scored

- Lowest opponent effective FG%

They're second in the league at:

- Fewest turnovers

- Fewest opponent points off turnovers

- Fewest opponent 2nd chance points

- Fewest opponent assists

- Most opponent turnovers

The most remarkable part is how they've built their team. Their 3rd and 4th leading scorers, Ajay Mitchell and Aaron Wiggins, were both 2nd round draft picks. Their 5th leading scorer, Isaiah Joe, was a 2nd round pick by the 76ers who got waived, then refurbished by the Thunder like an estate sale armoire. Their best defender, Lu Dort, went undrafted.

The team just finds a way to bring the best out of players that any other team could have had. What did they see that everybody else missed, and what did they do to develop them?

As a fan of another NBA team, and someone who lived in Seattle in the 15 years after the Sonics were stolen away to OKC, I want to get off Mr Presti's Wild Ride. But statistically, it's great.

Bucking trends

Victor Wembanyama is by far the best shot blocker in the NBA, averaging 3.6 blocks a game. But the Spurs are only 6th overall in blocks. Nikola Jokic is by far the best passer in the NBA, but the Nuggets are only 5th overall in assists. Steph Curry is the best 3 point shooter of all time. But the Warriors are only 12th in 3 point percentage. This isn't all that surprising. Just because one player is good at a particular skill, that doesn't mean the rest of the team is.

What's more surprising to me is that Giannis Antetokounmpo draws the most free throws in the league, but the Bucks are 28th in free throw attempts. Teams that get a lot of free throw attempts tend to attack the basket a lot, or be the Los Angeles Lakers. The Bucks are weird because pretty much only Giannis does anything free throw-worthy. At the time I wrote this, center Myles Turner had not shot a single free throw in his last 63 minutes of game time. That doesn't seem like a recipe for success for the Bucks.

Basketball is broken

And I know the guy who did it: Nikola Jokić. Advanced stats aren't everything, but right now he has a Win Shares per 48 (WS/48) of .441. Win Shares are probably a little biased towards big men who score efficiently, and affected by the pace of the game. That aside, it's a pretty good stat as far as having a single number to quantify how good somebody is at basketball. It correlates pretty strongly with actual basketball watching, I think. The top players in WS/48 are usually the top candidates for the MVP every year. And it matches who we think the best players are historically.

Last year, the top player by WS/48 was Shai Gilgeous-Alexander, at .309. The year before, it was Jokic, at .299. The year before, it was Jokic at .308. In 2014, it was Steph Curry, at .288. In 2004, when the pace of play was slower, the leaders were Nowitzki and Garnett at .248. In 1994, it was David Robinson, at .273.

Pretty much anything over .250 is an MVP caliber season. There's really no historical precedent for a WS/48 of .441. After 12 games played, Jokic could be the worst player in the league for the next 7 games, and he'd still be having an MVP-type season overall.
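Here's the back-of-the-envelope version of that claim. It's a sketch under two loud assumptions: that he plays the same minutes every game (so WS/48 can be averaged by games played), and that "worst player in the league" means a WS/48 of .000, when the real floor can dip below zero.

```python
def blended_ws48(games_a, ws48_a, games_b, ws48_b):
    """Games-weighted average WS/48 across two stretches (assumes equal minutes per game)."""
    return (games_a * ws48_a + games_b * ws48_b) / (games_a + games_b)


# 12 games at .441, followed by 7 hypothetical games at .000
after_slump = blended_ws48(12, 0.441, 7, 0.0)
print(f"WS/48 after 19 games: {after_slump:.3f}")
print("still over the .250 MVP line:", after_slump > 0.250)
```

Under those assumptions he'd land around .279 after 19 games, still comfortably above .250.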

Before last game, it was .448. What did Jokic do last game that caused his WS/48 to go down a tiny bit? He got 36 points, 18 rebounds, and 13 assists, on good scoring efficiency and only 2 turnovers. That's a slightly below average game for him right now.

The perils of hand-rolled metrics, pt. 137

I was trying to put together something to show how historically off the charts OKC has been defensively. I started with using a fancy technique, PCA, before realizing that just adding up the ranks of each of the statistics was better and simpler. If one team is 1st in blocks, 2nd in steals, 2nd in opponent points in the paint, etc., just add the ranks up, lowest score is best.
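The rank-sum idea fits in a few lines. This is a sketch of the general technique, not the code from the early-nba-trends.ipynb notebook; the team names and numbers below are invented, and ties are broken arbitrarily by input order.

```python
def rank_sum(team_stats, higher_is_better):
    """Add up each team's rank in every stat. Lowest total is best.

    team_stats: {team: {stat: value}}
    higher_is_better: {stat: True if a bigger number is good for the defense}
    """
    totals = {team: 0 for team in team_stats}
    for stat, higher in higher_is_better.items():
        ordered = sorted(team_stats, key=lambda t: team_stats[t][stat], reverse=higher)
        for rank, team in enumerate(ordered, start=1):
            totals[team] += rank
    return sorted(totals.items(), key=lambda item: item[1])


# Made-up numbers for three made-up teams
stats = {
    "Team A": {"steals": 10.5, "opp_paint_pts": 40.0},
    "Team B": {"steals": 8.0, "opp_paint_pts": 44.0},
    "Team C": {"steals": 9.0, "opp_paint_pts": 50.0},
}
directions = {"steals": True, "opp_paint_pts": False}

for team, score in rank_sum(stats, directions):
    print(team, score)
```

One thing this makes obvious: every stat counts the same, and the gap between 1st and 2nd is treated as equal to the gap between 14th and 15th, which is part of why it's a blunt instrument.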

I ran it on every team over the last 15 years. All of the teams that did well on my metric were good defensively, and the teams that did poorly were putrid on defense. It's not totally useless. But it's a bad way to find the best teams of all time.

Here are the top defensive teams since 2010 by this metric:

- The 2025-26 OKC Thunder

- The 2018-19 Milwaukee Bucks (won 60 games with peak Giannis)

- The 2010-11 Philadelphia 76ers (last Iguodala season, young Jrue Holiday)

- The 2019-20 Orlando Magic (Aaron Gordon and some guys)

- The 2017-18 Utah Jazz (the "you got Jingled" meme team that beat OKC)

Ah well. That's not a terrible list. They were all very good at defense, and made it a big part of their team identity, but I don't think those are really the best defensive teams of the last 15 years. A team's rank by Defensive Rating is still a better predictor of the team's win percentage than my attempts.

There's definitely some Goodhart's Law potential here. OKC are near the top of a bunch of statistical categories, because they are good at defense overall. You can't necessarily get on their level just by trying to copy specific things OKC does well, like prevent fast break points.

We see you, Jalen Duren

More like Jalen Durian, because some of the things he's doing are just nasty. You will definitely get kicked off the bus in Singapore if you're watching Jalen Duren highlights.

Data used

All data from https://www.nba.com/stats/

I had to screen scrape some stuff from their website, since some of the endpoints in the python nba_api package are broken now. See the early-nba-trends.ipynb notebook for code.

Nov 19, 2025

Song: Talking Heads, "Cities", live at Montreux Jazz Festival, 1982

What's going on with the Grizzlies?

The easiest answer is they're miserably bad on offense. It's also the oddest thing about this team to me, since they scored effortlessly last year. The Grizzlies had found something that worked last season. They had the 6th best Offensive Rating, and 10th best Defensive Rating. Considering the Indiana Pacers made it within one game of winning the NBA Championship with the 9th best Offensive Rating and 13th best Defensive Rating, the Grizzlies were definitely a borderline contender.

This year, they're 27th in Offensive Rating, 21 positions worse than last year. (The falloff on defense is a little more understandable, since they have several very good defensive players injured right now.)

eFG+ is a measure of effective FG%, normalized so that 100 is league average. Here are the top 8 Grizzlies players by minutes played the last two seasons:

| Position | 2025 | 2024 | 2025 eFG+ | 2024 eFG+ | Diff |
|:---------|:-----|:-----|----------:|----------:|-----:|
| Center | Jock Landale | Zach Edey | 108 | 111 | -3 |
| PF | Jaren Jackson Jr | Jaren Jackson Jr | 98 | 101 | -3 |
| SF | Jaylen Wells | Jaylen Wells | 82 | 97 | -15 |
| SG | KCP | Desmond Bane | 77 | 104 | -27 |
| PG | Ja Morant | Ja Morant | 71 | 93 | -22 |
| Bench 1 | Santi Aldama | Santi Aldama | 96 | 106 | -10 |
| Bench 2 | Cedric Coward | Scottie Pippen Jr | 105 | 102 | 3 |
| Bench 3 | Cam Spencer | Brandon Clarke | 109 | 115 | -6 |

Except for rookie Cedric Coward, every single slot is a downgrade. Wells and Aldama have been significantly worse than last season, but the most dramatic is Ja Morant. The only players with around as many minutes played and a lower eFG+ are Ben Sheppard and Jarace Walker of the Indiana Pacers, young players who have been forced into playing a lot of minutes due to injuries.

Where have all the backup PGs gone?

A big problem for the Grizzlies is that they don't really have a backup point guard. They're far from the only team with a lack of PGs on the roster this season.

The Dallas Mavericks have been playing rookie forward Cooper Flagg as PG even though they knew their starting PG, Kyrie Irving, was injured coming into the season. The Nuggets have been experimenting with having forward Peyton Watson as backup PG. The Houston Rockets have no true PG in their "oops, all bigs" starting lineup, though Reed Sheppard is playing more and more off the bench, and looking pretty good.

It's an odd trend to me. Backup point guards have traditionally been cheap and easy to find -- guys like Ish Smith and D.J. Augustin. They're like small, functional trucks. They made a ton of them back in the day, but they kinda don't exist anymore, despite how useful and reasonably priced they were. Does that make Yuki Kawamura the Kei truck of this analogy? Yes, yes it does.

The Rockets and Nuggets are doing fine so far without playing a backup PG, but the Grizzlies' situation is just baffling to me. Ja Morant is one of the more injury prone players in the league. You didn't think you needed to find a real backup for him? (Wouldn't Russell Westbrook look good in a Grizzlies uniform?)

The Grizzlies have a stretch of easier opponents coming up, so I think they'll start looking a little better for that reason alone. Maybe they'll get some mojo back. But I'm always about process, rather than outcomes, and I just don't get the Grizzlies' process right now. They had something pretty cool going last year, and now they don't.

Teams can't control a lot of factors. Injuries, who they play on a given night, the bounce of the ball on the rim on a last second shot. There's a lot of luck. But the Grizzlies' problems seem to come down to things the coaching staff and front office can control: vision, planning, vibes, communication, style of play.

Mathletix Bajillion, week 3

One of these teams is random, one is chosen by an algorithm put together by me, a non-football guy. Can you guess which one is which?

All lines are as of Thursday morning.

The Neil McAul-Stars

last week: 1-4, -301

Overall: 6-4, +203

line shopping: +43

- PIT +2.5 -105 (lowvig)

- GB -6.5 +100 (lowvig)

- NO -2 -108 (prophetx)

- TB +6.5 +101 (prophetx)

- PHI -3 -110 (hard rock)

The Vincent Hand-Eggs

last week: 2-2-1, -10

Overall: 3-6-1, -344

line shopping: +16

- CIN +6.5 -107 (rivers)

- CHI -2.5 -105 (lowvig)

- ATL +2 -102 (prophetx)

- BUF -5.5 -101 (prophetx)

- DET -10.5 -102 (prophetx)

Nov 28, 2025

Song: The Jimi Hendrix Experience - Voodoo Child (Slight Return) (Live In Maui, 1970)

Jimmy Butler: still good

Last season, Jimmy Butler quiet quit on his team. He wanted a new contract from the Miami Heat, and they didn't want to give him one, so he just stopped trying. As a fan, it seemed like an annoying and entitled thing to do. He couldn't just play the season out?

Butler eventually ended up getting traded to the Golden State Warriors, who gave him the extension he wanted, and he started trying again.

Setting aside whether Butler was justified, was the extension worth it or not? Butler is 36 years old, an age where it's totally expected for players to start to decline. The NBA salary cap rules now make it so a team can't afford to get a contract as big as Butler's wrong.

The Warriors took a calculated risk, and it paid immediate dividends when Jimmy helped them sneak into the playoffs last year, but the team is about in the same position they were before they got him -- a few high level players, but not in the upper echelon of the league due to being old and incomplete.

Jimmy's doing great, though. He's at career highs in True Shooting (TS%) and effective FG percent (eFG%).

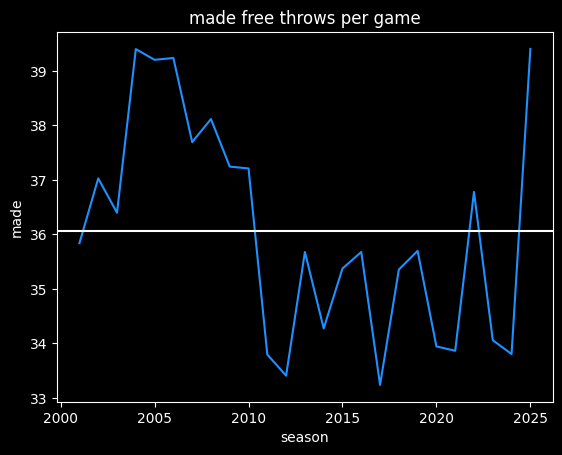

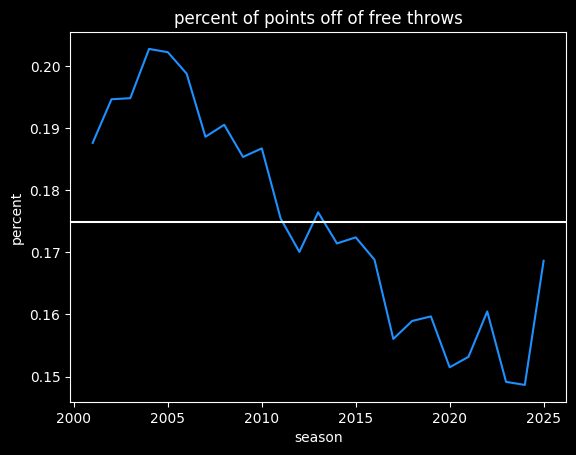

He's always been an efficient scorer, due to his ability to draw a lot of fouls. That means shooting a lot of free throws, which are easy points. Jimmy has been getting about 2.4x the number of free throws per shot attempt compared to the league average.

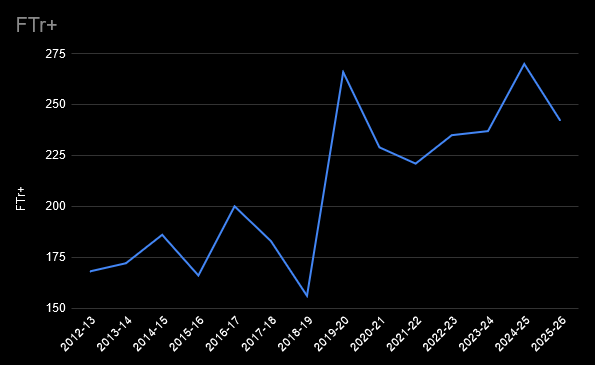

That's about where he's been for several seasons now. His free throw rate saw a huge jump when he moved to the Miami Heat in the 2019-2020 season, and he's maintained that ever since:

The career highs in TS% and eFG% probably aren't sustainable, though. Butler's a career 33% 3 point shooter who's making 45% of them this season. I wouldn't bet on that continuing, but he'll always be valuable on offense if he can draw that many free throws.

Butler's defense is still great, as well. The Warriors are 6.5 points per 100 possessions better on defense when he's on the floor.

He stands out on all the advanced metrics. Right now, he's 4th in WS/48 (behind Jokic, Shai and Giannis), 8th in PER, 8th in VORP, 3rd in Offensive Rating, 8th in Box Plus/Minus, and 11th in EPM.

For the numbers he's putting up, I think he's worth the money.

Ewing theory, 2025 edition

Individuals don't win games, teams do. Sometimes it can be hard to tell how much of a player's individual contribution is actually increasing the likelihood of their team winning. And there's always opportunity cost: perhaps a big man would've been more valuable to this team than Butler has been.

When a star player gets injured, sometimes a team plays better, a phenomenon Bill Simmons popularized as "The Ewing Theory". We're seeing some of that this year.

The Atlanta Hawks are 2-3 this season when star Trae Young plays, and 9-4 when he doesn't.

The Memphis Grizzlies are 4-8 when star Ja Morant plays, and 2-4 when he doesn't. (While the win percentage is the same, the team has looked less hapless in those 6 games.)

The Orlando Magic are 6-6 when star Paolo Banchero plays, and 4-2 when he doesn't.

All three players have distinctive play styles that their team must run to maximize their talents -- to paraphrase James Harden, they are the system. Sometimes maximizing the opportunities for the best player means wasting some of the talents of the other players on the team.

Similar distinctive players like Harden, Jokic and Haliburton are far more essential -- their teams are much worse when they are out, despite the same potential on paper for holding their teams back.

What's the difference between the Hawks, who have been doing better without Trae Young, and the Pacers, who are completely hapless without Tyrese Haliburton? I'm not going to read too much into such small sample sizes, but it's an interesting thing to watch out for.

SGA's FTAs

Debates involving subjects anybody can have an opinion about tend to be much louder than subjects requiring specialized knowledge. It's the law of triviality. The purest form of this in sports is the question of who is the most valuable player. The NBA version of this debate is probably the loudest and least interesting of any sport.

There are people who don't know much about basketball or statistics, but will argue endlessly on the internet whether BPM or EPM or RAPTOR or VORP is the right metric for deciding who is the best player. Or rather, they decide on the player they like, then find the statistic that says what they want to hear.

For me, the MVP usually comes down to personal preference -- there are always a handful of players that are clearly better than everybody else, and which one is the most valuable among that set is a matter of taste, and often gets decided by narratives rather than anything rigorous. Perhaps rigor is futile. Pretty much every MVP caliber player is a unique basketball talent. None of them are really interchangeable -- they all break the mold in some way. Any sort of all-in-one number is bound to fail at capturing what makes each one special.

Perhaps because it is a matter of taste and ultimately a very trivial question, people tend to latch onto style points. Who is funnest to watch, who would be the funnest to play with. Who scores points ethically and unethically.

People who think that Shai Gilgeous-Alexander (SGA) shouldn't be the MVP derisively call him FTA, implying he gets awarded more free throws than he deserves, or is otherwise a free throw merchant -- someone who baits defenders into fouling him.

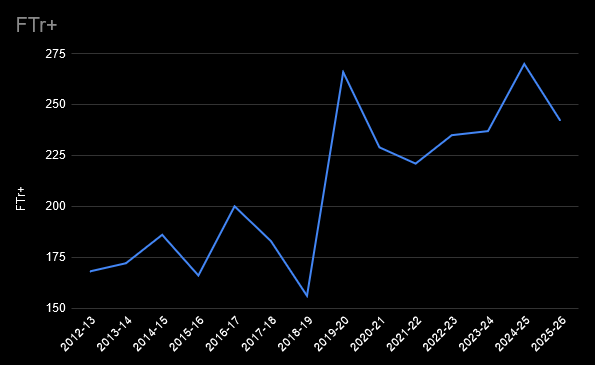

Shai is currently the leader in FTAs this year, so the FTA nickname is accurate in one sense. Here are the 10 players with the most free throw attempts this season, plus Jokić (15th place).

| Name | Attempts | FTr | FTr+ | PPG |
|:-----|---------:|----:|-----:|----:|
| Shai Gilgeous-Alexander | 167 | 0.465 | 164 | 32.2 |
| Luka Dončić | 150 | 0.551 | 194 | 34.5 |
| Deni Avdija | 143 | 0.472 | 166 | 24.9 |
| Devin Booker | 141 | 0.416 | 147 | 26.4 |
| James Harden | 139 | 0.488 | 172 | 27.8 |
| Franz Wagner | 136 | 0.471 | 166 | 23 |
| Giannis Antetokounmpo | 132 | 0.532 | 188 | 31.2 |
| Jimmy Butler | 132 | 0.667 | 235 | 19.9 |
| Pascal Siakam | 130 | 0.444 | 156 | 24.8 |
| Tyrese Maxey | 125 | 0.334 | 118 | 33 |
| Nikola Jokić | 116 | 0.395 | 139 | 29.6 |

Shai's FTr+ of 164 indicates he gets 1.64x as many free throws per shot attempt as the average player.

In a vacuum, that seems high, but everybody on this list has an FTr+ of over 100. They're all good at drawing fouls. Most high volume scorers are. They score a lot and get fouled a lot for the same reason: they're hard to guard. Jimmy Butler is the king, though -- the only player on the list averaging under 20 points a game, and the only one with an FTr over .600.

Jimmy's getting 2 free throws for every 3 shots he attempts. Since he makes 80% of them, that's a free half point Butler gets every time he attempts a shot. As far as free throw merchants go, he's Giovanni de' Medici.
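The relationship between FTr and FTr+ can be checked straight from the table. This sketch assumes FTr+ is 100 times a player's FTr divided by the league-average FTr, which matches the "1.64x" reading above. The league average itself isn't given in the post, so the code backs it out from each row.

```python
# (FTr, FTr+) pairs copied from the table above
rows = {
    "Shai Gilgeous-Alexander": (0.465, 164),
    "Luka Dončić": (0.551, 194),
    "Jimmy Butler": (0.667, 235),
    "Nikola Jokić": (0.395, 139),
}

# ASSUMED definition: FTr+ = 100 * FTr / league FTr,
# so each row implies a league-average FTr.
implied_league_ftr = {
    name: ftr / (ftr_plus / 100) for name, (ftr, ftr_plus) in rows.items()
}
for name, league_ftr in implied_league_ftr.items():
    print(f"{name}: implied league FTr {league_ftr:.3f}")


def free_points_per_shot(ftr, ft_pct):
    """Expected free throw points generated per field goal attempt."""
    return ftr * ft_pct


# Butler: 0.667 free throws per shot, making 80% of them
print(f"Butler: {free_points_per_shot(0.667, 0.80):.2f} points per shot attempt")
```

Every row implies a league-average FTr of about 0.284, so the two columns agree with each other, and Butler's 0.667 works out to a bit over half a point per shot attempt.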

Compared to his peers, SGA's free throw rate is pretty tame. It's 7th on this list -- lower than Luka Dončić, Deni Avdija, James Harden, Franz Wagner, Giannis, and Jimmy Butler.

It's a little higher than Jokić's, but I don't know how you decide that an FTr+ of 139 is ethical, but an FTr+ of 164 is unethical (or a sign that the refs are in the tank for SGA). Where's the line? Why do other MVP candidates like Luka and Giannis escape criticism, when they draw more fouls per shot than SGA does?

I'll take a deeper dive into the topic some other time, but the fact that Luka's free throw rate took a jump when he got traded to the Lakers is another example of why the NBA stands for Not Beating Allegations.

Mathletix Bajillion, week 4

As usual: one team picks randomly, one team uses a simple algorithm.

Both the mathletix teams have losing records now, but so do all 5 Ringer teams, so it's still anyone's game. We're still saving a lot of (imaginary) money by shopping for lines, instead of taking them at -110. Even though I got lazy with shopping, I still managed to find all 10 bets at reduced juice.
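To show why a few cents of juice matter, here's a minimal sketch that converts American odds into the win rate needed to break even. The function names are made up for illustration, and this isn't necessarily how the "line shopping" totals below were tallied.

```python
def profit_per_unit(american_odds):
    """Profit on a winning bet for each 1 unit risked."""
    if american_odds < 0:
        return 100 / -american_odds  # e.g. -110: risk 110 to win 100
    return american_odds / 100       # e.g. +100: risk 100 to win 100


def break_even_win_rate(american_odds):
    """Win rate at which wins and losses cancel out at these odds."""
    profit = profit_per_unit(american_odds)
    # p * profit = (1 - p) * 1  =>  p = 1 / (1 + profit)
    return 1 / (1 + profit)


# The standard price plus the reduced-juice prices that show up in this week's picks.
for odds in (-110, -108, -107, -104, 100):
    print(f"{odds:+d}: break even at {break_even_win_rate(odds):.2%}")
```

At -110 a bettor has to win a bit over 52% of the time just to tread water. At +100 it's a coin flip.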

Lines as of Friday morning.

The Neil McAul-Stars

last week: 1-4, -323

Overall: 7-8, -120

line shopping: +60

- MIA -5.5 -104 (prophetX)

- HOU +3.5 -107 (prophetX)

- WAS +6 -108 (prophetX)

- CAR +10 -108 (prophetX)

- NE -7 +100 (prophetX)

The Vincent Hand-Eggs

last week: 3-2, +97

Overall: 6-8-1, -247

line shopping: +33

- LV +9.5 -104 (lowvig)

- CLE +5 -104 (prophetX)

- PHI -7 -108 (prophetX)

- PIT +3 +100 (lowvig)

- WAS +6 -108 (prophetX)

Sources

All data sourced from basketball-reference.com

Dec 02, 2025

Song: Hobo Johnson, "Sacramento Kings Anthem (we’re not that bad)"

Stats are as of 12/1/2025. Spreadsheet here

The Kings

The Sacramento Kings are going through it. Again. For most other teams, the choices they've made recently would be a historically incompetent period, a basketball Dark Ages. For the Kings, it's just another season.

Firing the only coach that's had success with the team in a long time and replacing him with a buddy of the owner who is willing to work cheap. Drafting two All-Star point guards and trading both of them away. Trading for two guys (LaVine and DeRozan) who everybody knew would be a bad fit from their years playing together on the Bulls. Trading the very good, ultra reliable Jonas Valančiūnas for the washed Dario Šarić just to save a tiny bit of money.

Do you know the definition of insanity? The Kings' front office doesn't.

Sacramento is 5-16 so far. They're definitely not a good team, but maybe they're not that bad? They went 40-42 last season, which is respectable in the loaded Western Conference. They've had a brutally hard schedule, and 21 games is not a large sample size.

I'll get back to the Kings, but first, the other side of the coin.

The Heat

The Miami Heat were arguably in a worse place than the Kings at the end of last season. They won 37 games, 3 fewer than the Kings, and play in the easier conference. They looked totally checked out by the end of the season, another team stuck in the middle of the NBA standings.

It was a disappointing, dysfunctional run where they traded away their best player and seemed to be going nowhere. The vibes were bad, but they didn't blow up the team for the hope of maybe being good again someday, or run back the same players and the same scheme for another bout of mediocrity. Instead, they trusted the culture they built and tried something innovative.

They've been one of the best stories in the NBA so far, greatly outperforming expectations by playing a radical brand of basketball on the offensive end. They completely overhauled their offense to quickly attack one-on-one matchups before the defense can get set, rather than the traditional approach of creating mismatches using pick-and-rolls.

Here's a good video from Thinking Basketball explaining the strategy, an even more turbo-charged version of the scheme the Grizzlies used to great success last year. As a basketball fan, it's a bit weird to watch at first, because pick-and-rolls are such a traditional part of basketball, but it's refreshing.

I don't know why more teams don't have the courage to try unconventional things -- or in the Grizzlies' case, stick with something unconventional that was working. In the NBA, it takes a great coach and front office to defy the conventional wisdom and try to get more out of the players already on the roster -- putting them in a position to succeed rather than re-shuffling the deck.

The most remarkable part is that overhauling the offense hasn't sacrificed the Heat's identity as a top-tier defensive team at all. They have the 4th best defense in the league so far by Defensive Rating. They're doing this while playing at the fastest pace in the league this year. Teams that play fast are usually just trying to outscore their opponents, with little attention to defense.

But the Heat are using their scheme to generate high quality offensive opportunities for players that are primarily on the court for their defense. They're building on their core identity rather than changing directions entirely. They're building on strength.

An elite playmaker like Tyrese Haliburton, or a generational talent like SGA or Jokić, can set up defense-first players for easy looks, but most teams that concentrate on defense struggle to generate enough offensive firepower. The Orlando Magic have been plagued by that problem for years. The system Miami is running seems like a cheat code for defensively minded teams -- at least until the league inevitably figures out ways to slow it down.

I couldn't find anybody in the media who knew the Heat's scheme change was coming, much less anybody who had an idea of how big an impact it would have. I looked at a bunch of preseason power rankings, and they were all pretty down on the Heat for the same reasons, without any hint that they could fix the problems with a different play style. It's much easier to assess the impact of roster changes.

For example, this is from NBC Sports' preseason power rankings:

this was a middle-of-the-pack Heat team last season that made no bold moves, no massive upgrades, leaving them in the same spot they were a year ago.

Here's Bleacher Report's:

for an offensively challenged team, replacing [Tyler Herro's] scoring (21.5 points over the last four seasons) and distribution (4.6 assists in the same span) is going to be tough.

And USA Today's:

Losing Tyler Herro for the first two months of the season, potentially, comes as a significant blow to a team that struggled to score — especially late in games — even when he was on the floor.

With the change in style the Heat are still only a mid-tier team on offense, ranking 14th in Offensive Rating. But that's a big step up from last year, when they were 21st, especially given they haven't had their best offensive player for the first month of the season.

Are they going to win a title with the present roster? No, but in addition to giving their fans something to cheer for, all their players will look much better on paper than they did at the start of this season. If they do decide to trade players, the Heat can get more in return for them. And it's not hard to get free agents to move to Miami if the team is winning and the vibes are good, so they could be a real contender again quickly.

It's weird that tanking is seen as the best way to increase the chances of future success in the NBA, rather than building a winning culture and innovating. The two teams with the most success in recent years at doing a major rebuild have been the Spurs and the Thunder. They were both bad for a few years, but they're also two of the best run teams in the league. They draft well, they trade well, they do player development well, they do analytics well. They had a clear vision of the type of team they wanted to build and the clear ability to develop players. If a team doesn't have those organizational competencies, what's the point of a tank? They're just going to waste their high-level draft picks, not develop the rest of the roster, and be mediocre again in 5 years.

Power ranking the power rankers

I collected data from six preseason NBA power rankings. There's a link to the spreadsheet at the top of the article. I would've liked to collect more data, and I'm sure there are other sites that did good NBA power rankings, but stuff like that is basically impossible to find these days, lost in a sea of completely LLM-generated baloney or locked behind paywalls. It's not useful to interrogate why some LLM stochastically decided that the Warriors are the 4th best team in the NBA this year, but there's seemingly an endless supply of that type of nonsense. Which is to say: thanks for reading this, however you managed to get here. I hope you'll keep coming back for this completely human-generated baloney.

I compared the rankings from each list to each team's point differential, which is a better estimate of how good a team is than their win-loss record. How good were our mighty morphin' power rankers at predicting the current standings?

So far, the most accurate ranking has been RotoBaller's, with a Spearman correlation of .76. The worst has been USA Today, at .69. Taking the median rank of all six sources produced a correlation of .74, which was better than 5 out of the 6 individual scores. So we're getting a "wisdom of crowds" effect, which is interesting, since all the rankings are fairly similar to each other. (Previously discussed in Majority voting in ensemble learning.)

I also included rankings based on my own preseason win total estimates. I got a score of .73, right in the middle of the pack. That's respectable, but I can't believe I'm getting beat by the freaking New York Post.
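For anyone who wants to try this kind of comparison themselves, here's a minimal sketch of a Spearman correlation plus a median-rank "crowd" ranking. The six teams, three rankers, and point differentials are invented toy data, not numbers from the spreadsheet, and the helper names are mine.

```python
from statistics import median


def average_ranks(values):
    """Rank values from 1 (smallest) upward; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the two sets of ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data: preseason ranks (1 = best) for six made-up teams, in the same team order.
rankers = {
    "ranker_1": [2, 1, 3, 4, 6, 5],
    "ranker_2": [1, 3, 2, 5, 4, 6],
    "ranker_3": [1, 2, 4, 3, 5, 6],
}
point_diff = [8.1, 4.0, 1.5, -0.5, -3.2, -7.0]

# Turn point differential into a rank where 1 is the best differential.
actual_rank = average_ranks([-d for d in point_diff])

scores = {name: spearman(ranks, actual_rank) for name, ranks in rankers.items()}
consensus = [median(team_ranks) for team_ranks in zip(*rankers.values())]
scores["median of all"] = spearman(consensus, actual_rank)

for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

In this toy setup each ranker makes different mistakes, so the median rank cancels them out and beats every individual list. Real rankings share a lot of the same blind spots, so the effect is smaller.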

Comparing rankings to records, the power rankers were too high on the Clippers, Cavaliers, Pacers, Kings and Warriors. They were too low on the Raptors, Suns, Heat, Spurs and Pistons.

Strength of schedule

Scheduling matters. Some teams have played much harder schedules than others, and we're dealing with small sample sizes, so win-loss records can be deceiving early in the season.

I grabbed adjusted Net Rating (aNET) data from dunksandthrees, which calculates the offensive and defensive ratings for each team, adjusted for strength of schedule. I also included Simple Rating System (SRS) data from basketball-reference, which is the same idea as aNET, but a different methodology.
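To give a feel for what a schedule adjustment does, here's a rough sketch of the textbook version of a Simple Rating System: a team's rating is its average point margin plus the average rating of its opponents, solved by repeated substitution. The four teams and game results are invented, and the real basketball-reference and dunksandthrees calculations may well differ in the details.

```python
# Made-up results: (team, opponent, team's margin of victory). Not real games.
games = [
    ("C", "A", -10), ("C", "A", -6), ("C", "B", 4),
    ("A", "B", 14), ("A", "D", 8),
    ("D", "B", 6), ("D", "B", 4),
]


def simple_ratings(games, tol=1e-10, max_iter=10_000):
    """Return (average margin, schedule-adjusted rating) for each team."""
    margins, opponents = {}, {}
    for team, opp, margin in games:
        margins.setdefault(team, []).append(margin)
        margins.setdefault(opp, []).append(-margin)
        opponents.setdefault(team, []).append(opp)
        opponents.setdefault(opp, []).append(team)

    avg_margin = {t: sum(m) / len(m) for t, m in margins.items()}
    ratings = dict(avg_margin)  # start from raw margins, then refine

    for _ in range(max_iter):
        # rating = own average margin + average rating of the opponents faced
        updated = {
            t: avg_margin[t] + sum(ratings[o] for o in opponents[t]) / len(opponents[t])
            for t in ratings
        }
        change = max(abs(updated[t] - ratings[t]) for t in ratings)
        ratings = updated
        if change < tol:
            break

    # Shift so the average team is rated 0.
    mean = sum(ratings.values()) / len(ratings)
    return avg_margin, {t: r - mean for t, r in ratings.items()}


avg_margin, ratings = simple_ratings(games)
for team in sorted(ratings, key=ratings.get, reverse=True):
    print(f"{team}: margin {avg_margin[team]:+.2f}, schedule-adjusted {ratings[team]:+.2f}")
```

Team C plays the strongest team twice, so its adjusted rating comes out better than its raw margin. Team D feasts on the weakest team, so its adjusted rating comes out worse.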

The difference between aNET and average point differential gives a sense of which team records might be the most misleading compared to the team's actual skill level. For instance, the Sacramento Kings have already played the Thunder, Nuggets and Timberwolves three times apiece, going 2-7 over those games. Even a decent team would be expected to have a losing record against those opponents.

Based on aNET, the Cavaliers, Warriors, Clippers, Kings and Celtics are probably better than their records indicate.

Going the other way, the Raptors have had a deceptively easy schedule, going 7-1 in games against the woeful Nets, Hornets, Pacers and Wizards. Going 7-1 doesn't tell us much, because those are teams pretty much everybody should be able to beat.

The stats indicate that the Raptors, Hornets, Spurs, Jazz, and Suns are probably not as good as their records.

Most of the teams the power rankers got wrong have been hurt or helped significantly by their schedules so far. The biggest exception has been the Miami Heat, who apparently nobody saw coming, and are probably about as good as their record says they are.

The Kangz

What to make of the Kings? According to basketball-reference, they've had the hardest schedule in the league so far. It's fair to say they're not as bad as the record says.

While they're 28th in point differential, they're 25th by aNET, and 26th by SRS. So they might have 7 or 8 wins instead of 5 if they'd played a league average schedule. That's not that much, though, and a clear step back from last year.

Their rookie, Nique Clifford, has not looked good so far, and they don't have many players that other teams would want in a trade. It doesn't seem like they have any clue how to develop young talent. Their highest paid player, Zach LaVine, has another year on his contract, doesn't play defense, and has put up a -1.1 VORP this year. They just benched their one big signing of the offseason (Dennis Schröder), and are instead starting Russell Westbrook, a man born during the Reagan Administration playing on a one-year minimum deal.

On paper, they don't have much they can do to get better. But everybody was saying that about the Heat at the end of last year, and look at them now. I just can't see the Kings having that type of organizational courage, but I hope they find it somehow rather than spend years on another doomed rebuild. Sacramento fans deserve better than another version of the current mess. At least an innovative mess would be a change of pace from trying the same stupid thing over and over. What's the worst that could happen?